1. INTRODUCTION

Journal papers are a highly representative form of scholarly research source. Individual papers are assembled into a journal, and journals in turn progressively build up what is known as a research field. Bellis (2009) depicted learning as a mosaic or jigsaw puzzle comprising a certain number of individual units (documents) growing together into subject-related repositories (journals) that, in turn, evolve into the documentary source of well-established, eventually institutionalized specialties and disciplines. For these reasons, academic journals have been widely used as experimental data in academic research, and the citation relationships among journal papers serve as a method of analysis.

Research on the relations among papers and on their performance has relied on quantitative analysis of cited references, such as co-citation analysis, and bibliographic coupling has been used to create maps of influence that reveal the subject themes of papers (Bellis, 2009). Subject-based analysis is a promising line of research because it is of practical use and generates quantitative data that can guide future scientific research. In particular, in parallel with the SciVal database, it makes it possible to search for leading-edge and weak research areas among research clusters in order to improve research performance and seek research collaboration in those areas.

Subject-based analysis is generally based on bibliographic subjects, journals, and the research areas in which papers are published. This research aims to determine the extent of a paper's influence by tracking the papers that cite a specific paper from a journal in particular research areas. Citation index studies can be subdivided, according to the nature of the citation, into Cited Reference studies and Citing Paper studies. A Cited Reference study examines the previously published papers that serve as the evidence and sources of a paper, while a Citing Paper study examines a paper's impact and its distribution over fields of study.

This research is concerned with citing papers: papers in the selected journals that cite the target journal papers are tracked. The research aims to identify the fields in which the target journal papers are applied and to determine whether the scientific fields of the citing papers vary significantly across publication years. While studies of accumulated outcomes (scholarly journals) are under way as an emerging research trend (Astrom, 2007; Huang & Chang, 2014), this study focuses on the subject relation between a paper and the papers that cite it, unlike general citation studies that use paper-to-paper referencing.

By focusing on the relationship between the representative research field of the scholarly journal and its other research fields, this study aims to determine: i) whether subject impact relations change over time; and ii) whether the subject impact factor changes significantly, even within a specific research field, depending on the time of research.

2. RESEARCH METHODOLOGY

Citation relationships between journal papers can be represented by Cited Reference and Citing Paper metrics. Cited Reference refers to the body of previously existing research that a paper uses, either as direct references or as co-cited papers (papers cited together with the given paper). Citing Paper refers to the research material that uses published papers as references. In the citation relations of target journal papers, cited references are the papers that contributed to the development of the target journal papers, while citing papers are the ones that, along with many other papers, cite the target journal papers. Therefore, target journal papers can be considered to have contributed to the development of the citing papers. This study takes a statistical approach to examine how internationally published papers of a field-specialized target journal are used in other research fields, and analyzes the citations of target journal papers in other journals to estimate their contribution to those fields.

The major analysis tools used were clustering and one-way analysis of variance (one-way ANOVA). The research fields of the target journal papers and of the citing papers were identified by clustering the subjects of the target journal papers and the fields of the citing papers. One-way ANOVA was then used to test whether the contribution to the fields of citing papers differs significantly by the publication year of the journal papers.
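As a minimal sketch of the one-way ANOVA step, the F statistic can be computed from the between-group and within-group sums of squares. The function name, the variable names, and the per-article scores below are hypothetical illustrations, not the data or software used in this study.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of each observation around its own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical scores for one citing field, grouped by publication year.
scores_by_year = {
    2001: [1, 2, 3],
    2004: [2, 3, 4],
    2007: [5, 6, 7],
}
f_stat, df_b, df_w = one_way_anova_f(list(scores_by_year.values()))
print(f_stat, df_b, df_w)
```

A large F relative to the F distribution with (df_between, df_within) degrees of freedom indicates that at least one year-group differs; a post hoc test is then needed to find which groups differ.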

The clustering technique used to establish subject areas in this study is VOSviewer clustering, which enables the processing of massive data in a manner similar to multidimensional scaling. VOS (Visualization Of Similarities) was first developed in the Netherlands in 2006. It has been progressively advanced by research and is widely used by researchers in the field of bibliometrics (Van Eck & Waltman, 2010; Waaijer, van Bochove, & van Eck, 2010; Waltman, van Eck, & Noyons, 2010; Heersmink et al., 2011; Lee, 2011; Leydesdorff & Rafols, 2011; Lu & Wolfram, 2010; Su & Lee, 2010; Tijssen, 2010).

The journal Information Systems Research (5-Year Impact Factor=4.276) was chosen as the target journal because it has the highest impact factor in the research field of “Information Science & Library Science.” To determine year-wise variation in contribution, papers published in 2001, 2004, 2007, and 2010 were selected. All experimental data were collected from Web of Science (http://webofknowledge.com) in July 2014.

The study proceeds in three steps. First, the characteristics and research fields of the target journal papers are grouped by publication year. Second, the characteristics and research fields of the papers citing each group are identified. Last, the significance of variation in the citing fields across the year-wise experimental groups is determined. The research fields used in this study are the subject categories from JCR (http://webofknowledge.com/JCR).
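The grouping in the first two steps can be sketched as a tally of citing-paper research fields per publication-year group. The record layout and the sample records below are assumptions made for illustration; they are not the Web of Science export format or the study data.

```python
from collections import Counter, defaultdict

# Hypothetical records: (publication year of the cited target article,
# research fields assigned to the citing paper).
citations = [
    (2001, ["Computer Science", "Business & Economics"]),
    (2001, ["Computer Science"]),
    (2004, ["Information Science & Library Science"]),
    (2010, ["Business & Economics", "Engineering"]),
]

def fields_by_group(records):
    """Count citing research fields separately for each publication-year group."""
    tallies = defaultdict(Counter)
    for year, fields in records:
        tallies[year].update(fields)
    return tallies

tallies = fields_by_group(citations)
top_2001 = tallies[2001].most_common(1)
print(top_2001)
```

The per-group counts produced this way are the kind of input that the clustering and the variance analysis would then operate on.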

3. TARGET JOURNAL ARTICLES

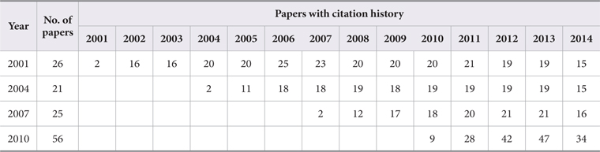

Articles from Information Systems Research selected for the experiment total 128, of which 124 (96.9%) have a citation history. As shown in Table 1, articles with a citation history reach a stable level of citation three or four years after publication. Papers published in 2010 show a comparable increase in the number of citing papers, which offsets the more than doubled number of published papers.

3.1. Citation Performance of Experimental Articles

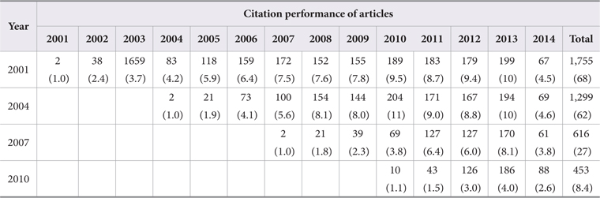

Table 2 shows citation performance as the average citation index of the experimental articles. Citation performance tends to increase after publication and then stabilize. The citation performance of papers from 2001 has held a constant level since 2006, their sixth year. Papers from 2004 and 2007 have maintained their average citation performance from their fifth year, 2008 and 2011, respectively. Interestingly, over more than 10 years of tracking the 2001 and 2004 papers, the citation performance of the 2004 papers rose above that of the 2001 papers in 2008 (their fifth year), fell below it in 2012 (their ninth year), and currently exceeds it by a negligibly small margin. This fluctuation in citation performance suggests that each annual group of papers contributes to different research fields.

With constant citation performance for more than 10 years, our target journal Information Systems Research falls into the research field categorization of social science – “Information Science & Library Science,” as well as “Operations Research & Management Science.”

Among the experimental articles, those cited over the longest period are as follows. For 2001: “Research commentary: Technology-mediated learning - A call for greater depth and breadth of research” (2001-2014, 83 cases). For 2004: “IT outsourcing strategies: Universalistic, contingency, and configurational explanations of success” (2004-2014, 91 cases) and “Building effective online marketplaces with institution-based trust” (2004-2014, 270 cases). For 2007: “Is the world flat or spiky? Information intensity, skills, and global service disaggregation” (2007-2014, 33 cases). For 2010: “Ex ante information and the design of keyword auctions” (2010-2014, 22 cases), “Quality uncertainty and the performance of online sponsored search markets: An empirical investigation” (2010-2014, 15 cases), and “Information transparency in business-to-consumer markets: Concepts, framework, and research agenda” (2010-2014, 10 cases).

The most-cited papers are: for 2001, “Research commentary: Desperately seeking the ‘IT’ in IT research - A call to theorizing the IT artifact” (2004-2014, 355 cases); for 2004, “Building effective online marketplaces with institution-based trust” (2004-2014, 270 cases); for 2007, “Through a glass darkly: Information technology design, identity verification, and knowledge contribution in online communities” (2008-2014, 98 cases); and for 2010, “The new organizing logic of digital innovation: An agenda for Information Systems Research” (2012-2014, 25 cases).

3.2. Subject Analysis of Experimental Articles

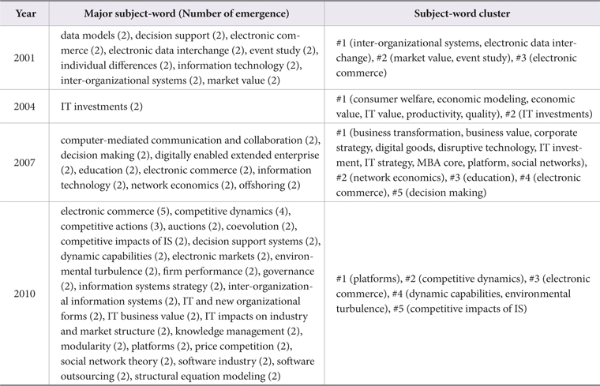

The experimental articles use 697 distinct subject-words (the “Author Keywords” field of the Web of Science record), appearing in 782 cases; on average, about 6.11 subject-words were used per paper. The major subject-words were “electronic commerce” (9 cases); “information technology” (6 cases); “information systems strategy,” “competitive dynamics,” “decision support systems,” “electronic markets,” and “resource-based view” (4 cases each); and “knowledge management,” “competitive actions,” “structural equation modeling,” “decision support,” “decision making,” “information systems development,” “business value,” “inter-organizational information systems,” “competitive impacts of IS,” “partial least squares,” and “coordination” (3 cases each). Table 3 shows the year-wise clusters of subject-words for the journal. The subject-word clusters for the entire set of experimental articles were similar to those for the articles with citation performance, apparently because articles with citation performance make up 96.9% of all the experimental articles.
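The subject-word counts can be reproduced with a simple frequency tally; the reported mean corresponds to 782 / 128 ≈ 6.11. The sketch below assumes, for illustration only, that each record's author keywords arrive as one semicolon-separated string and that keywords are matched case-insensitively; the sample strings are hypothetical.

```python
from collections import Counter

# Hypothetical author-keyword fields, one string per article.
records = [
    "electronic commerce; trust; electronic markets",
    "information technology; electronic commerce",
    "Electronic Commerce; decision support systems",
]

def keyword_stats(keyword_fields):
    """Return (counts, total occurrences, mean keywords per article)."""
    counts = Counter()
    for field in keyword_fields:
        # Normalize spacing and case so variants of one keyword are merged.
        words = [w.strip().lower() for w in field.split(";") if w.strip()]
        counts.update(words)
    total = sum(counts.values())
    return counts, total, total / len(keyword_fields)

counts, total, mean_per_article = keyword_stats(records)
print(len(counts), total, round(mean_per_article, 2))
print(counts.most_common(1))
```

Here `len(counts)` plays the role of the number of distinct subject-words and `total` the number of cases in which they appear.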

The earlier years yield fewer, and therefore larger, subject-word clusters: 3 clusters for 2001 and 2 for 2004, compared with 5 each for 2007 and 2010. The research fields as a whole were separated into 100 classes on the basis of ISI Categories, and it proved difficult to distinguish subject differences by year of publication using the subject-words and subject-word clusters of the experimental articles.

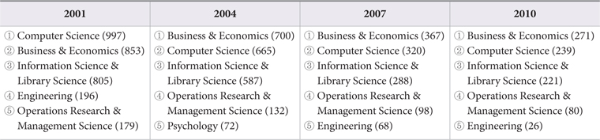

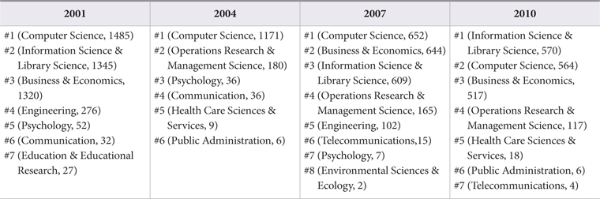

4. ANALYSIS OF CITING PAPER

A total of 5,836 papers cite the experimental articles, citing them 7,784 times in all. This corresponds to about 1.3 experimental articles referenced per citing paper. There are 613 cases of citing more than two experimental articles, and the highest number of experimental articles referenced by a single citing paper is 8. The citing papers come from 475 sources; the highest ranked are MIS Quarterly (337), Information Systems Research (273), Journal of Management Information Systems (225), Decision Support Systems (189), and European Journal of Information Systems (161). The research fields of the citing papers are, in order, Computer Science (2221), Business & Economics (2191), Information Science & Library Science (1901), Operations Research & Management Science (489), and Engineering (402). Table 4 lists the citing fields (1st-5th) of the experimental articles by publication year. All four groups of experimental articles (2001, 2004, 2007, and 2010) showed great similarity in citing fields. Nevertheless, the number of citing papers per research field tends to decline as the publication year of the experimental articles becomes more recent: 2001 (43), 2004 (33), 2007 (26), and 2010 (23). This indicates a change either in the research fields of the experimental articles or in the impact of the experimental articles on those research fields.
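The summary figures above follow from the citing-paper-to-article links; the reported mean is 7,784 / 5,836 ≈ 1.3. The sketch below shows one way such a summary could be computed. The identifiers and links are hypothetical, and the cutoff for counting multiple citers is left as a parameter rather than taken from the study.

```python
# Hypothetical links: citing paper -> set of experimental articles it references.
citing_to_targets = {
    "citing-A": {"t1"},
    "citing-B": {"t1", "t2"},
    "citing-C": {"t2", "t3", "t4"},
    "citing-D": {"t5"},
}

def citation_link_summary(links, min_targets=2):
    """Summarize how many experimental articles each citing paper references."""
    sizes = [len(targets) for targets in links.values()]
    return {
        "citing_papers": len(sizes),
        "links": sum(sizes),
        "mean_per_citing_paper": sum(sizes) / len(sizes),
        # Citing papers that reference at least `min_targets` articles.
        "multi_citers": sum(1 for s in sizes if s >= min_targets),
        "max_referenced": max(sizes),
    }

summary = citation_link_summary(citing_to_targets)
reported_mean = 7784 / 5836  # figures reported in the text
print(summary)
print(round(reported_mean, 1))
```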

4.1. Research Field Cluster of Citing Paper

To analyze the research fields of the papers citing the experimental articles, clustering and statistical methods were applied to the four groups (2001, 2004, 2007, and 2010) of experimental articles to assess the extent of their contribution to each field. Each research field cluster was named after the research field with the highest weight. There are 8 clusters in total across the four experimental groups: #1 (Computer Science), #2 (Business & Economics), #3 (Operations Research & Management Science), #4 (Psychology), #5 (Communication), #6 (Telecommunications), #7 (Oceanography), and #8 (Science & Technology - Other Topics). Two of the aforementioned top 5 fields, “Information Science & Library Science” and “Engineering,” did not name a cluster but are instead included as cluster items: “Information Science & Library Science” is under cluster #1 (Computer Science), and “Engineering” is under cluster #3 (Operations Research & Management Science).

The clustering of citing research fields for the four publication-year groups of experimental articles, shown in Table 5, reveals the distinct nature of the research fields for each group. In the 2004 citing field clusters, “Information Science & Library Science” and “Computer Science” are clustered together; in the other year-groups, however, they are clustered differently. Likewise, “Engineering” clustered with “Operations Research & Management Science” in 2001, 2004, and 2010, but not in 2007.

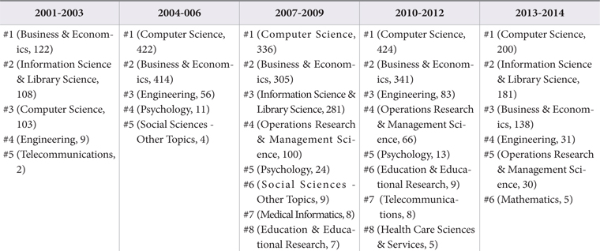

Table 6 shows the research field clusters of citing papers for the 4 year-groups, in three-year units counted from the publication of the experimental papers. As time passes, the number of clusters increases. Specifically, as observed for the number of citations in Table 2, the number of clusters remains stable from the sixth year after publication.

However, a stable citation index and a stable number of research field groups do not by themselves imply that the contribution to research fields is stable. To establish this, chronological variation in the research fields must be considered.

For comparative analysis, groups of citing papers were compared across consecutive three-year periods following publication of the experimental articles. Table 7 displays the citing field clusters for the experimental articles published in 2001, where chronological variation in the citing fields is apparent. The research fields that cite most are 1st “Computer Science,” 2nd “Business & Economics,” 3rd “Information Science & Library Science” (IS), 4th “Operations Research & Management Science” (OP), and 5th “Engineering” (ENG). In the citing fields of 2001-2003, OP belongs to cluster #1 (Business & Economics) and IS belongs to cluster #2, but in 2004-2006, OP is in cluster #3 and IS is in cluster #1. During the periods of stable citation performance, 2007-2009 and 2010-2012, the number of citing field clusters stays at 8 but the composition of the clusters differs. ENG was placed in cluster #4 (Operations Research & Management Science) in 2007-2009 but formed a new cluster in 2010-2012. IS formed its own cluster #3 (Information Science & Library Science) in 2007-2009; however, it fell into cluster #1 (Computer Science) in 2010-2012. Moreover, besides the major citing fields, Psychology, Social Science, Education & Educational Research, and Telecommunications appeared as new clusters and disappeared again over time.
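The three-year periods used for the 2001 group (2001-2003, 2004-2006, 2007-2009, 2010-2012) amount to binning each citing paper by the number of years elapsed since publication. A minimal sketch follows; the function name and the default width are illustrative choices, not part of the original procedure.

```python
def citing_window(pub_year, citing_year, width=3):
    """Return the (start, end) years of the window that contains citing_year.

    Windows are consecutive `width`-year periods starting at pub_year.
    """
    if citing_year < pub_year:
        raise ValueError("a citing paper cannot precede the cited article")
    index = (citing_year - pub_year) // width
    start = pub_year + index * width
    return (start, start + width - 1)

print(citing_window(2001, 2005))  # second window of the 2001 group
print(citing_window(2004, 2004))  # first window of the 2004 group
```

Grouping citing papers by this window before clustering makes it possible to compare the citing field clusters of one period with those of the next.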

4.2. Variation in Citing Field on the Publication Year of Experimental Articles

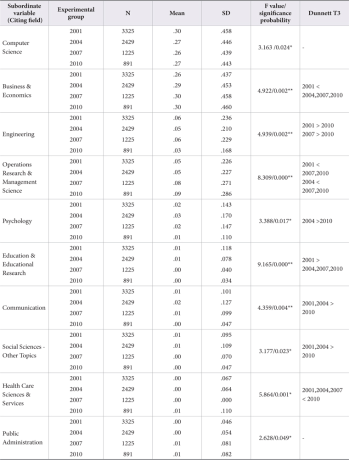

As mentioned above, the 4 groups of experimental articles (2001, 2004, 2007, and 2010) showed varied citing fields. As a further step, one-way analysis of variance was used to test statistically whether research fields vary with publication year, as shown in Table 8. The analysis covers the top 10 research fields (citing fields), namely “Computer Science,” “Business & Economics,” “Information Science & Library Science,” “Engineering,” “Operations Research & Management Science,” “Psychology,” “Education & Educational Research,” “Communication,” “Social Sciences - Other Topics,” and “Health Care Sciences & Services,” together with research fields that formed individual clusters in the citing field clustering, such as “Telecommunications,” “Public Administration,” “Environmental Sciences & Ecology,” and “Geography.”

The citing fields varied significantly among the experimental groups, except for “Information Science & Library Science,” “Telecommunications,” “Public Administration,” “Environmental Sciences & Ecology,” and “Geography.” For the field “Business & Economics,” the later the year of the experimental group, the greater the average score, and post hoc comparison with Dunnett's T3 showed a significant difference between the 2001 group and the 2004, 2007, and 2010 groups.

The “Operations Research & Management Science” and “Health Care Sciences & Services” fields show a similar pattern of average score increasing with year. Post hoc testing for “Operations Research & Management Science” showed that the 2001 and 2004 groups differed significantly from the 2007 and 2010 groups, whereas for “Health Care Sciences & Services” the 2001, 2004, and 2007 groups differed from the 2010 group.

By contrast, the “Education & Educational Research” and “Social Sciences - Other Topics” fields showed the opposite trend, with the average decreasing as the year of the group became later. For “Education & Educational Research,” the 2001 group differed significantly from the 2004, 2007, and 2010 groups, whereas for “Social Sciences - Other Topics” the 2001 and 2004 groups differed from the 2007 and 2010 groups.

In summary, the more recent groups of target journal papers had a high contribution (average score) in the fields of “Business & Economics,” “Operations Research & Management Science,” and “Health Care Sciences & Services,” and a low contribution (average score) in the fields of “Education & Educational Research” and “Social Sciences - Other Topics.” This shows that the target journal Information Systems Research, which is classified under “Information Science & Library Science” and “Operations Research & Management Science” in JCR (Journal Citation Reports), has in recent years contributed more to the fields of “Business & Economics,” “Operations Research & Management Science,” and “Health Care Sciences & Services,” while its contribution to the fields of “Education & Educational Research” and “Social Sciences - Other Topics” has declined.

5. CONCLUSION

An academic article, from its birth, necessitates the accumulation of numerous reference sources. Thereafter, articles are used by subsequent research to create other articles, and this process repeats. The objective of this study is to help understand the current status of article reference through chronological analysis and to determine, using clustering and one-way analysis of variance, whether the citing research fields of the journal change significantly with publication year.

The target journal of the study was Information Systems Research, which has the research fields of “Information Science & Library Science” and “Operations Research & Management Science.” Analysis of its papers published in 2001, 2004, 2007, and 2010 shows that citation performance rises after publication and that, from 3-4 years after publication, the number of citing papers remains stable. The citing field clusters show a similar trend.

The research fields of citing papers are ranked as Computer Science (2221), Business & Economics (2191), Information Science & Library Science (1901), Operations Research & Management Science (489), and Engineering (402). However, the ranking differed with the publication year of the experimental articles, and the cluster study explains the difference in detail. Variation in the grouping of citing fields was also observed. The citing field “Information Science & Library Science,” seemingly highly correlated with “Computer Science,” formed a separate cluster in 2004, suggesting that research field clusters vary with time and with the similarities within clusters.

Analysis of the research fields of citing papers for the 4 groups of experimental articles (2001, 2004, 2007, and 2010) showed significantly distinct contributions to the citing fields. For the citing field “Business & Economics,” the 2001 group made a significantly smaller contribution than all other year-groups; that is, papers published after 2001 contributed more to “Business & Economics” than earlier ones. The citing field “Operations Research & Management Science” showed 2001, 2004 < 2007 and 2001, 2004 < 2010; that is, target journal papers contributed more to this citing field in the recent groups (2007, 2010) than in the earlier ones (2001, 2004).

The significance of this study lies in its subject-field approach, which estimates the influence of papers by studying citing papers, as opposed to earlier studies of cited papers. Subject influence was studied to show statistically significant variation by publication year.

References

Centre for Science and Technology Studies, L. U. (2014). VOSviewer. Retrieved from http://www.vosviewer.com/

Elsevier (2014). SCOPUS. Retrieved from http://www.scopus.com

Persson (2011). Bibexcel: A tool-box developed by Olle Persson. Retrieved from http://www8.umu.se/inforsk/Bibexcel/

Pluzhenskaia, M. A. (2007). Publication and citation analysis of disciplinary connections of library and information science faculty in accredited schools. Ann Arbor: University of Illinois at Urbana-Champaign. 3270003:180.

QS Top Universities. University rankings. Retrieved from http://www.topuniversities.com/university-rankings

Thomson, I. (2014). Journal citation reports: JCR social sciences. Retrieved from http://webofknowledge.com/JCR

Thomson, I. (2014). Web of Science. Retrieved from http://apps.webofknowledge.com/

U.S. News & World Report. Education rankings. Retrieved from http://www.usnews.com/rankings