1. INTRODUCTION

Crowdsourcing harnesses large groups of online users to address specific problems (Doan, Ramakrishnan, & Halevy, 2011). In the domain of mobile content, crowdsourcing has become a major way of populating information-rich online environments such as digital libraries. Examples include creating content that describes locations of interest, and creating or verifying maps.

Crowdsourcing projects may engage volunteers to perform tasks, but recruitment and retention are challenging because volunteerism depends on individuals’ willingness to devote their time and effort (Yuen, Chen, & King, 2009). Paying for expertise is an alternative (e.g. Deng et al., 2009), but it is potentially costly and confined to projects with adequate funding. Further, it is often difficult to determine a payment amount that appropriately reflects task complexity (Ipeirotis & Paritosh, 2011). Therefore, alternative motivational mechanisms need to be considered to widen the appeal of crowdsourcing projects to a larger group of users.

One possibility is to use computer games to attract participants to crowdsourcing projects, given the popularity of this entertainment medium. According to statistics reported by the Entertainment Software Association (ESA, 2016), 59% of Americans have played computer or video games. Further, 48% of the most frequent players have played social games and 36% have played mobile games. In games designed for crowdsourcing, also known as human computation games or crowdsourcing games, players contribute their intelligence to a given endeavor through enjoyable gameplay (Goh & Lee, 2011). For example, in the context of crowdsourcing mobile content, players in Geo-zombie (Prandi et al., 2016) map urban elements using categories from a predefined list and add information such as descriptions and photos to earn points. These points are then exchanged for ammunition to shoot zombies that attack the players. Crowdsourcing games have also been developed in other contexts, including image tagging (von Ahn & Dabbish, 2004), generation of common-sense facts (Vannella, Jurgens, Scarfini, Toscani, & Navigli, 2014), annotation of textual resources (Poesio, Chamberlain, Kruschwitz, Robaldo, & Ducceschi, 2013) and creation of metadata for music clips (Dulačka, Šimko, & Bieliková, 2012).

The increasing popularity of crowdsourcing games has attracted research interest, primarily on game design issues (Tuite, Snavely, Hsiao, Tabing, & Popović, 2011), with some work on usage motivations (Han, Graham, Vassallo, & Estrin, 2011) and player perceptions (Pe-Than, Goh, & Lee, 2014). However, actual content creation patterns in crowdsourcing games have not been adequately investigated. Among the few exceptions, Goh, Lee, and Low (2012) conducted a content analysis of the contributions made in a mobile crowdsourcing game to uncover motivations for sharing, while Celino et al. (2012) found that the number of contributions in a mobile game for linking urban data was relatively high.

Understanding actual usage is essential for verifying the outcomes of design and perceptions research, as well as for identifying challenges that users face while using these applications (Rogers et al., 2007). However, a shortcoming of prior work is that contributions have rarely been compared against those made in other systems. Further, layering games onto crowdsourcing applications could modify usage behavior because of an entertainment element absent from non-game-based versions. This raises the question: would a non-game-based crowdsourcing app yield different types of contributions from a game-based app?

We address this research gap through two objectives. First, we develop two crowdsourcing apps for mobile content creation: a game-based version in the virtual pet genre, in which players rescue pets, and a non-game version. Second, we compare both apps to shed light on actual usage patterns, focusing on the types of content users created. Understanding the nature of crowdsourced content and how it differs across application types should lead to a better understanding of user behavior, and in turn to better-designed applications that benefit users and foster sustained usage.

2. RELATED WORK

2.1. Crowdsourcing Games

Crowdsourcing may be defined as the act of gathering a large group of people to address a particular task through an open call for proposals via the Internet (Schneider, de Souza, & Lucas, 2014). In this context, crowdsourcing games may be considered dual-purpose applications that perform tasks and offer entertainment concurrently. A well-known example is the ESP Game (von Ahn & Dabbish, 2004), whose purpose is to label (tag) images, an activity that is difficult for computers but easy, although potentially tedious, for humans. Two randomly paired players on the Web are shown the same image. Each player has to guess the terms their partner might use to describe that image, and both are rewarded when their terms match. Through gameplay, players produce labels as byproducts that can be harnessed to improve image search engines.
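The output-agreement rule described above (both players are rewarded when their terms match) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not von Ahn and Dabbish's implementation: the function name, the time-ordered `(player, term)` stream, the lower-case normalisation, and the optional `taboo` set (the original game used taboo words to push players toward new labels) are all choices made for this example.

```python
from typing import Dict, Iterable, Optional, Set, Tuple


def first_agreed_label(
    guesses: Iterable[Tuple[str, str]],
    taboo: frozenset = frozenset(),
) -> Optional[str]:
    """Return the first term that both players typed for one image, or None.

    `guesses` is a time-ordered stream of (player_id, term) pairs.
    Taboo terms are expected in lower case.
    """
    typed: Dict[str, Set[str]] = {}  # player_id -> terms that player has typed
    for player, term in guesses:
        term = term.strip().lower()
        if not term or term in taboo:
            continue  # ignore empty and taboo terms
        # A match occurs when some *other* player has already typed this term.
        if any(term in terms for other, terms in typed.items() if other != player):
            return term
        typed.setdefault(player, set()).add(term)
    return None
```

For instance, if player A types "dog" and "grass" while player B types "puppy" and then "Dog", the function returns "dog", which would become a label for the image.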

The accessibility of content and services afforded by mobile devices has led to the development of mobile crowdsourcing games. Location-based crowdsourcing games, which collect content about real-world locations, are typical examples. Eyespy (Bell et al., 2009) generates photos and text about geographic locations that are useful for supporting navigation or creating tourist maps. Players take pictures of locations and share them with others, who then have to determine where the pictures were taken. Points are awarded for producing more recognizable images and for confirming the images of other players.

Next, Urbanopoly (Tuite et al., 2011) uses geospatial data from OpenStreetMap and challenges players to complete mini-games with embedded information creation or verification tasks, so that they can conquer venues and become their landlords. Through gameplay, players contribute geospatial data that is useful for other location-based services. In SPLASH, locations are represented by pets, and players “feed” them content so that they can evolve (Pe-Than et al., 2014). The appearance of a pet changes depending on the quality, quantity, recency and sentiment of the content fed. The content associated with pets describes the respective locations and is accessible to other players. Finally, GEMS (Geolocated Embedded Memory System; Procyk & Neustaedter, 2014) allows players to document location-based stories for personal reflection and for future generations to find. Players receive directives from a game character and complete them by creating memory records (multimedia content) that capture a particular experience and its place of origin. As players create records, they earn points and access tokens that can be used to unlock secret information about the game character.

2.2. Understanding Usage Patterns

As mentioned earlier, there is a dearth of work on usage patterns in crowdsourcing games. In one of the few studies in this area, Celino et al. (2012) investigated whether players of a mobile location-aware game contribute the most representative or meaningful photos of points of interest (POIs) in Milan. Specifically, the authors introduced a mobile game called UrbanMatch in which players are presented with a photo of a POI from a trusted source and have to link it to photos from untrusted sources. The more players link an untrusted photo with the trusted one, the stronger a candidate the untrusted photo becomes for representing the POI. Based on the contributions of 54 UrbanMatch players who tested 2006 links, the authors found that 99.4% of links between trusted and untrusted sources were correct. Additionally, Bell et al. (2009) examined the images contributed via Eyespy (described earlier) and uncovered nine categories, including those relating to shops, signs, and buildings. These categories were consistent with the game’s purpose of generating content to aid street navigation. The authors also compared the photos contributed in Eyespy against similar photos on Flickr, and found that the former were more helpful for navigation.
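The UrbanMatch idea that an untrusted photo becomes a stronger candidate the more players link it to the trusted POI photo amounts to vote counting. The Python sketch below assumes links arrive as hypothetical `(player, trusted_photo, untrusted_photo)` triples, counts each player once per pair, and applies a hypothetical `min_votes` cut-off; Celino et al. (2012) may aggregate links differently.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rank_candidates(
    links: Iterable[Tuple[str, str, str]],
    trusted_photo: str,
    min_votes: int = 2,
) -> List[Tuple[str, int]]:
    """Rank untrusted photos by how many distinct players linked them to trusted_photo."""
    # A set keeps each (player, trusted, untrusted) link once,
    # so repeated links by the same player are not double-counted.
    unique_links = {(p, t, u) for p, t, u in links if t == trusted_photo}
    votes = Counter(u for _, _, u in unique_links)
    # min_votes is a hypothetical threshold for calling a photo a strong candidate.
    strong = [(photo, n) for photo, n in votes.items() if n >= min_votes]
    # Most-linked first; ties broken alphabetically for a stable order.
    return sorted(strong, key=lambda item: (-item[1], item[0]))
```

Counting distinct players rather than raw links is a design choice that stops one enthusiastic player from promoting a photo alone.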

Next, Procyk and Neustaedter (2014) organized content contributed in GEMS along different axes, including writing style (e.g. accounts of specific events or descriptions of a location's significance) and location type (e.g. public places, countries/cities, vehicles). Interviews with participants suggested that creating and accessing location-based stories in GEMS helped them learn about and connect with the places being described. Further, in an analysis of crowdsourced SPLASH content, Goh, Razikin, Chua, Lee, and Tan (2011) found 15 categories of contributions, including food, emotions, places of interest, and people. Interestingly, nearly 10% of the content was nonsensical. Two explanations were proposed. One was that users were new to the game and were testing its functionality with short posts. The other was that users attempted to "game" the system, since contributing content earned points. Follow-up interviews confirmed the latter, with some users admitting that they wanted to earn as many points as possible to purchase in-game items and/or rise in the rankings. Finally, Massung, Coyle, Cater, Jay, and Preist (2013) compared three mobile crowdsourcing apps for collecting data on shops for pro-environmental community activities. One app used points and badges as virtual rewards for data collected, another used financial incentives, and a third served as a control without any incentives. Results suggested that data quality was similar across all apps, but the app with financial incentives yielded the most data, followed by the app that used points and badges, and then the control.

In summary, prior research has mainly analyzed content generated by a single application. Further, some studies have compared crowdsourcing applications offering different types of incentives in terms of the quantity and quality of content produced. In contrast, our research aims to provide a holistic understanding of crowdsourcing games through a comparative analysis of content contributed via game-based and non-game applications.

3. APPLICATIONS DEVELOPED

We developed two mobile apps for crowdsourcing location-based content for this research: Collabo, a collaborative crowdsourcing game, and Share, a non-game app. We developed our own apps to gain better control over the look-and-feel of the interfaces and easy access to the contributed content.

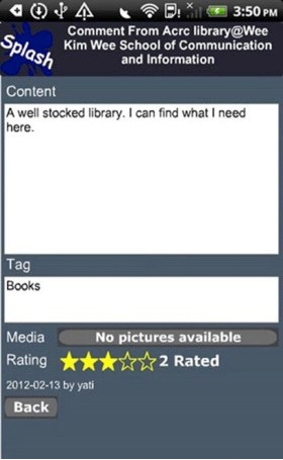

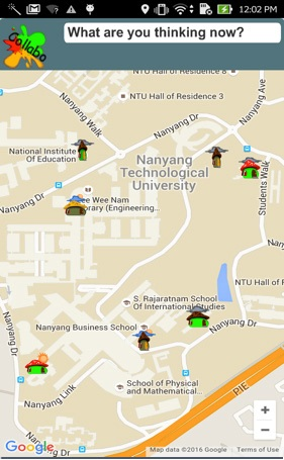

Both mobile apps shared the purpose of crowdsourcing location-based content. The content model was based on SPLASH, a crowdsourcing game we developed earlier, which is briefly described here; more details may be found in Goh et al. (2011). Crowdsourced content items are known as “comments”, each comprising a title, tags, a description, media elements (e.g. photos) and ratings (see Figure 1). SPLASH organizes content on two levels: “places” and “units”. Places represent geographic points of interest such as buildings and parks, and each can be divided into more specific units that hold the respective comments. For instance, if a mall is a place, a particular store within it is one of its units and contains comments. Both mobile apps offer a map-based interface to facilitate creating and accessing comments. Specifically, places are indicated by map markers in the shape of mushroom houses (see Figure 2). Each house has a number of units, and each unit contains the crowdsourced comments.
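The places/units/comments hierarchy maps naturally onto nested records. The following Python sketch is one possible representation under stated assumptions: the class and field names, the `lat`/`lon` marker coordinates, and storing media as file paths are illustrative choices, not the actual SPLASH schema.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List, Optional


@dataclass
class Comment:
    title: str
    description: str
    tags: List[str] = field(default_factory=list)
    media: List[str] = field(default_factory=list)    # e.g. paths to photos (assumed)
    ratings: List[int] = field(default_factory=list)  # one 1-5 star value per rating

    def average_rating(self) -> Optional[float]:
        """Mean star rating, or None if the comment has not been rated."""
        return mean(self.ratings) if self.ratings else None


@dataclass
class Unit:
    name: str  # e.g. a store inside a mall
    comments: List[Comment] = field(default_factory=list)


@dataclass
class Place:
    name: str   # e.g. a mall, shown as a mushroom-house marker on the map
    lat: float  # hypothetical marker coordinates
    lon: float
    units: Dict[str, Unit] = field(default_factory=dict)

    def unit(self, name: str) -> Unit:
        """Return the named unit, creating it on first use."""
        if name not in self.units:
            self.units[name] = Unit(name)
        return self.units[name]
```

In this representation a mall is a `Place`, a store inside it a `Unit`, and each post about that store a `Comment` whose ratings can be averaged for display.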

Returning to the two apps, Collabo is a virtual pet game that asks players to form teams with others to rescue starving pets that live in units within places. Once a place is selected, the game shows a list of pets, in which starving ones appear sad and dark in color (see Figure 3). To rescue a pet, players feed it by creating comments or by rating comments created by others on a five-star scale. Pets being rescued are marked with a “star”, which signals other players to join the rescue team. Once a pet is rescued, the game allocates an equal number of points to each team member and displays a winning message (see Figure 4). Collabo differs from the original SPLASH, which offers more features such as avatars, virtual chat rooms and mini-games. In the present study, we wanted a simpler game to allow better comparison against a non-game variant. This meant focusing only on feeding virtual pets and excluding the other game-based features of SPLASH. In doing so, both Collabo and Share (described next) had one primary task: generating mobile content.
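The rescue loop can be sketched as a small state machine. The Python below is hypothetical throughout: the description above does not state how much feeding a pet needs or how many points a rescue is worth, so `FEEDS_TO_RESCUE` and `POINTS_PER_MEMBER` are placeholder constants, and each new comment or rating is assumed to count as one feeding action.

```python
from dataclasses import dataclass, field
from typing import Dict, Set

FEEDS_TO_RESCUE = 5     # hypothetical: feeding actions needed to rescue a pet
POINTS_PER_MEMBER = 10  # hypothetical: points each rescuer receives


@dataclass
class Pet:
    unit: str
    feeds: int = 0
    team: Set[str] = field(default_factory=set)
    rescued: bool = False

    @property
    def starred(self) -> bool:
        # A pet with a rescue in progress is flagged so that others can join.
        return bool(self.team) and not self.rescued


def feed(pet: Pet, player: str, scores: Dict[str, int]) -> bool:
    """Record one feeding action (a new comment or a rating of someone else's).

    Returns True if this action completed the rescue.
    """
    if pet.rescued:
        return False
    pet.team.add(player)
    pet.feeds += 1
    if pet.feeds >= FEEDS_TO_RESCUE:
        pet.rescued = True
        for member in pet.team:  # every team member gets the same award
            scores[member] = scores.get(member, 0) + POINTS_PER_MEMBER
        return True
    return False
```

Modelling the team as a set means a player who feeds the same pet several times is still rewarded once, which matches the idea of an equal allocation per team member.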

Share is a non-game-based mobile app for crowdsourcing content that serves as a control. It has no game elements and offers commonly found features for contributing and accessing mobile content. A user accesses content by tapping a mushroom house on the map, after which a list of units is presented. When a unit is selected, a list of its comments is displayed (Figure 5). Users can view and rate a comment by tapping on it, and can create new comments by tapping a button in the top panel of each unit. Users are not awarded any game points or rewards for their activities. Instead, they can view statistics such as the number of comments and ratings created.

4. METHODOLOGY

4.1. Participants

A total of 160 participants, of whom 89 were female, were recruited from two large local universities. Their ages ranged from 21 to 46 years (M = 23 years, SD = 3.77). Prior studies suggest that university students represent an important age demographic of both online game players and mobile Internet users (e.g., Kirriemuir, 2005; Wu & Liu, 2007). Therefore, we contend that our sample may reasonably represent the population of online game players.

Participants came from disciplines such as Computer Science (47.8%) and Engineering (38.8%), with the remainder from the arts and social sciences. In the sample, 88.1% surfed the Web on their mobile phones and 80.2% used them for map navigation. Further, 72.5% used social network applications on their mobile phones to share text and multimedia information, while 52.5% used the location check-in feature of such applications. The majority of participants (81.3%) indicated that they were game players.

4.2. Procedure and Analysis

Prior to the study, participants attended a 30-minute briefing session. The usage of each app was demonstrated, followed by a short practice session. Usage scenarios involving rescuing pets (for Collabo), as well as creating, browsing, and rating content (for Share) were presented to help participants better understand the apps.

Participants were required to use both applications on Android-based mobile phones on two separate days, spaced one day apart. The study was counterbalanced to account for possible sequence effects: half the participants used Collabo followed by Share, while the other half used Share followed by Collabo. Participants could either use their own mobile phones or borrow one from the researcher. Those using their own phones had to ensure that the phones met minimum requirements for screen size and processor power, so that all participants had a similar user experience. Participants were also told that they could use each app to create and rate content at any convenient time, with a minimum of two 30-minute sessions a day.
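The counterbalanced design can be expressed as a simple assignment of participants to the two app orders. This Python sketch assumes random allocation with a fixed seed and hypothetical participant IDs; the study states only that each half of the sample used a different order, not how the halves were formed.

```python
import random
from typing import Dict, Sequence, Tuple

Order = Tuple[str, str]
ORDERS: Tuple[Order, Order] = (("Collabo", "Share"), ("Share", "Collabo"))


def counterbalance(participants: Sequence[str], seed: int = 0) -> Dict[str, Order]:
    """Assign half of the participants to each app order (random split assumed)."""
    ids = list(participants)
    random.Random(seed).shuffle(ids)  # seeded so the allocation is reproducible
    half = len(ids) // 2
    # First half: Collabo then Share; second half (plus any odd one out): Share then Collabo.
    return {pid: ORDERS[0] if i < half else ORDERS[1] for i, pid in enumerate(ids)}
```

With the 160 participants reported here, each order receives 80 people, so any learning or fatigue effect from the first session is spread evenly across both apps.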

All comments created using the two mobile apps (Collabo and Share) were extracted, analyzed and coded via an iterative procedure common in content analysis (Neuendorf, 2002). The unit of analysis was a single comment. First, comments were classified into categories derived from prior related work (e.g. Goh et al., 2012; Naaman, Boase, & Lai, 2010). Next, for comments that could not be classified into these categories, we inductively constructed new categories by identifying similarities across entries and coding them into logical groupings (Heit, 2000). Whenever a new category was added, previously categorized entries were reviewed to check whether they needed to be reclassified. This process was repeated until all comments could be consistently categorized. Categories and their definitions were recorded in a codebook, which fully explained them to the coders.

In our study, two coders performed the content analysis independently. Intercoder reliability, measured using Cohen’s kappa, was 0.841 for Collabo and 0.927 for Share. Both values are above the level recommended by Neuendorf (2002).
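Cohen's kappa corrects raw agreement for agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of comments both coders placed in the same category and p_e is the chance agreement implied by each coder's category frequencies. The Python function below is a minimal from-scratch sketch of this standard two-coder formula; the study does not say which tool was used, and scikit-learn's `sklearn.metrics.cohen_kappa_score` is one library alternative.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two coders who each assigned one category per comment."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must label the same, non-empty set of comments")
    n = len(coder_a)
    # Observed agreement: share of comments both coders put in the same category.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: product of the coders' marginal proportions, summed over categories.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        # Both coders used one identical category for every comment; kappa is undefined.
        return math.nan
    return (p_o - p_e) / (1 - p_e)
```

For example, `cohens_kappa(["a", "a", "b", "b"], ["a", "b", "b", "b"])` returns 0.5: the coders agree on 3 of 4 comments (p_o = 0.75), but chance agreement is already 0.5.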

5. RESULTS

A total of 3323 comments were contributed. Surprisingly, although all participants used both apps, Collabo received far more comments (2359) than Share (964). Table 1 shows the 10 categories derived. The categories added inductively during coding were “App-related” and “Complaints and suggestions”. These categories are described in the following paragraphs, together with excerpts from relevant comments.

Notably, the category showing the greatest difference between the two apps was "App-related", covering posts about the software or gameplay. In Collabo, it attracted the largest proportion of comments, at 30.52% of all contributions. These comments served as a means to achieve Collabo's objective of saving pets, and thus contained little informational value apart from commentary about the gameplay experience. Examples include "this game is quite hard", "it needs food", and "rate this comment to feed it". In contrast, this category was the second-smallest in Share, at 2.59%. It appeared that without a gaming objective, participants saw little need to create content about the app. As in Collabo, these comments were primarily commentary about the usage experience, such as "this is my first session, same for everyone?"

For Share, the category that attracted the largest proportion of comments was "Places" (26.35%), which was also a strong second for Collabo (18.19%). In both apps, these comments described locations that participants found interesting. Examples included a recommendation of a park for taking photos ("beautiful place that reminds me of the Qing dynasty") and a suggestion of a place to study ("Fantastic place to study or chill with friends during long breaks between lessons.").

The next highest-ranked categories, each attracting 10% or more of comments in at least one app, were:

• "Activities and events" (7.08% for Collabo, 15.25% for Share), which refer to descriptions of activities or events shared by participants ("they have a calligraphy show now"). Comments made were from the participant's perspective, and may contain opinions about what happened or will happen (e.g. "it was super crowded" when referring to an event).

• "Status updates" (8.82% for Collabo, 13.28% for Share), which referred to making personal updates in relation to the current location. This may involve what the participant did or is currently doing ("time to eat lunch!", "bye I'm going home"), or how he/she feels or thinks ("so sleepy", "feeling stressed").

• "Food" (7.63% for Collabo, 10.48% for Share), which discussed food and food-related establishments. Typical comments were recommendations ("you should try the spinach quiche"), food reviews ("The brownies are really good. The staff are friendly too..."), or experiences about the establishments visited ("Wish there were greater variety of food!").

• "Queries" (8.22% for Collabo, 10.06% for Share), where participants asked questions or requested assistance about various locations. For example, a participant who wondered about a fountain that never seemed to operate asked, "have wondered everyday why that fountain doesn't work?" Another asked about shopping discounts ("is there any promotion going on right now?").

Categories that attracted less than 10% of contributions in both apps were:

• "Pleasantries" (7.21% for Collabo, 8.71% for Share), which included greetings, well-wishes and other polite remarks (e.g. "hello friends", "see you perhaps later. Thanks for helping out :)"). These were associated with specific locations, often with the expectation that certain participants would visit and reciprocate. Thus, user names were also sometimes included in such comments ("hello 61", where 61 referred to a particular user ID).

• "Complaints and suggestions" (4.41% for Collabo, 6.02% for Share), which involved participants making complaints ("not enough chairs available during lunch time", "wish there was a bus stop so I don't have to work so far") or sharing suggestions pertaining to specific locations ("bring a jacket! very cold there brr", "please no take out, save plastic!").

• "Humor" (3.40% for Collabo, 5.50% for Share), which comprised jokes or humorous remarks, often related to a location. An example is "Went twice. No one. Great place to make out #justkidding".

At the other end of the scale, "Spam" was surprisingly the smallest category for Share (1.76%) and the third smallest for Collabo (4.54%). This category refers to comments with meaningless words or irrelevant content. Such comments appeared to serve as a quick way to make contributions with little effort. Examples include strings such as "mfjdjd" or runs of punctuation ("?????").

6. DISCUSSION

Participants created more than twice as many comments in Collabo (2359) as in Share (964). Since all participants used both apps, this suggests that our game-based approach to crowdsourcing could better motivate contributions. This is consistent with prior work showing that the enjoyment derived from gameplay fosters further usage of crowdsourcing games (Pe-Than et al., 2014).

Further, while both apps yielded similar categories of contributions, their proportions differed, suggesting that the features afforded by our game shaped behavior differently from the non-game-based approach. In particular, Collabo produced more “App-related” content than Share. As noted previously, such content served to achieve the game's objective of rescuing pets via feeding. Unsurprisingly, an examination of these contributions indicates that most carried little information describing or discussing specific locations. Nevertheless, they may serve an alternative purpose of socializing (Lee, Goh, Chua, & Ang, 2010). For example, one Collabo player pleaded with other players to save a pet: “SOMEONE SAVE IT BY RATING PLS”. When the pet was rescued, another player exclaimed “thanks for the rescue!!!” Such contributions may create a mini-community lasting the duration of a gameplay session, thus serving to attract and sustain interest in the game (Goh et al., 2012).

In contrast, Share did not offer any incentives to contribute content. Correspondingly, participants produced fewer contributions and had little need to create app-related posts. Even when such posts were created, they did not garner any replies, signaling an absence of community, unlike in Collabo. For example, a participant posted "this is my first session, same for everyone?", but it did not attract any responses. Consequently, most app-related contributions in Share were non-conversational personal expressions about using the app. Examples include a participant who compared Share with Collabo’s gameplay, "oh my now no pets", and another who happily noted the end of his/her participation, "woohoo last session".

A further comparison of category proportions in Table 1 shows that in most cases, Share had a larger percentage than Collabo. This is largely because Collabo's "App-related" category contained about 28 times as many comments as Share's, which reduced the proportions of Collabo's other categories. In other words, this category absorbed comments that might otherwise have been made in other categories. This again reinforces the notion that Collabo players likely sought an efficient way of generating content to rescue pets.
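The "28 times" comparison holds for absolute comment counts rather than percentages. A short Python check, using only the totals and "App-related" percentages reported in the Results section, backs out approximate counts; because the percentages are rounded, the recovered counts are estimates.

```python
# Reported totals and "App-related" percentages (Results section).
TOTALS = {"Collabo": 2359, "Share": 964}
APP_RELATED_PCT = {"Collabo": 30.52, "Share": 2.59}


def approx_count(app: str) -> int:
    """Back out an approximate comment count from a rounded percentage."""
    return round(TOTALS[app] * APP_RELATED_PCT[app] / 100)


collabo_n = approx_count("Collabo")
share_n = approx_count("Share")
count_ratio = collabo_n / share_n                                  # ratio of counts
pct_ratio = APP_RELATED_PCT["Collabo"] / APP_RELATED_PCT["Share"]  # ratio of percentages
print(collabo_n, share_n, round(count_ratio, 1), round(pct_ratio, 1))
```

This gives about 720 versus 25 comments (a ratio of about 28.8), whereas the percentages themselves differ by a factor of roughly 11.8.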

We were somewhat surprised by the low proportion of nonsensical, "Spam"-related posts in both apps (less than 5% for both Collabo and Share). Two reasons are postulated. First, the novelty of the apps could have made participants less prone to generating nonsensical content, perhaps leading them to put more effort into contributing to the community of players. Second, other categories of content may have lessened the need for spam. These included the "App-related" category, especially for Collabo, but also others such as "Status updates", "Humor", and "Pleasantries". In other words, instead of posting meaningless or irrelevant content, players treated both apps as an alternative social media platform for sharing whatever came to mind, much like Twitter (Humphreys, Gill, Krishnamurthy, & Newbury, 2013). The primary difference was that the contributions were mostly tied to specific locations. Specifically, contributions concerned the players themselves, other players, or non-playing individuals, with the location as a backdrop for expression. Examples include a participant who arrived at a building and posted "I never knew such a cafe existed", and another who, while waiting for a train, pleaded to no one in particular, "train pls don't breakdown". Together, the "Status updates", "Humor" and "Pleasantries" categories accounted for 19.43% of posts in Collabo and 27.49% in Share.

In contrast, the categories that better fit the notion of location-based content as a means of learning about a specific place or navigating include "Activities and events", "Complaints and suggestions", "Food", "Places", and "Queries". These categories constitute 45.53% of total content in Collabo and 68.15% in Share. Such contributions covered what a particular location was about, what could be found or done there, what was happening there, and assessments or reviews of the location. Examples include "... oh and you guys have to try green tea latte with a shot of espresso" and "… but lighting at night is quite difficult to get it right for photographs…". Additionally, this group of categories differed more across apps than the personal-expression categories described previously. We speculate that to contribute meaningfully to describing locations, participants had to be familiar with what a particular place offered. This was less of a requirement for personal expression, which primarily drew on one's own experiences, thoughts and feelings. Thus, because Collabo players were tasked with rescuing pets by posting content, they may have turned to personal expression as the less demanding option.
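The grouped totals in this section and the previous one can be reproduced by summing the per-category percentages reported in the Results section. In this Python sketch the two grouping lists mirror the text. Summing the rounded percentages gives 68.16 for Share's location-oriented group, one hundredth above the reported 68.15, a gap consistent with rounding of the individual percentages.

```python
from typing import Dict, List, Tuple

# Category percentages as reported in the Results section: (Collabo, Share).
PCT: Dict[str, Tuple[float, float]] = {
    "App-related": (30.52, 2.59),
    "Places": (18.19, 26.35),
    "Activities and events": (7.08, 15.25),
    "Status updates": (8.82, 13.28),
    "Food": (7.63, 10.48),
    "Queries": (8.22, 10.06),
    "Pleasantries": (7.21, 8.71),
    "Complaints and suggestions": (4.41, 6.02),
    "Humor": (3.40, 5.50),
    "Spam": (4.54, 1.76),
}
PERSONAL: List[str] = ["Status updates", "Humor", "Pleasantries"]
LOCATIONAL: List[str] = ["Activities and events", "Complaints and suggestions",
                         "Food", "Places", "Queries"]
COLLABO, SHARE = 0, 1  # index into each (Collabo, Share) tuple


def group_total(categories: List[str], app: int) -> float:
    """Sum the rounded percentages of the given categories for one app."""
    return round(sum(PCT[c][app] for c in categories), 2)


for name, group in (("personal expression", PERSONAL), ("location-oriented", LOCATIONAL)):
    print(name, group_total(group, COLLABO), group_total(group, SHARE))
```

The differences between apps (about 8 percentage points for personal expression versus about 22.6 for location-oriented content) follow directly from these totals.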

7. CONCLUSION

We contribute to the understanding of behavior surrounding crowdsourcing games by analyzing the content created by users of two mobile apps. The following implications may be drawn based on our findings.

First, games can potentially attract participation in crowdsourcing tasks because of the enjoyment derived through gameplay. In our study, the game (Collabo) generated more content than the non-game-based alternative (Share). Further, new players may be recruited through word-of-mouth, as suggested by prior work showing that enjoyment of crowdsourcing games increases the likelihood of them being recommended to others (Goh et al., 2012). Second, while games may better encourage participation, the contributions may not always conform to the intended goal of the crowdsourcing task. This is because introducing a game changes users' behavior as they respond to the excitement and challenge of accomplishing its objectives (Goh & Lee, 2011). Third, people participate in crowdsourcing for myriad reasons (Schenk & Guittard, 2011), and some of these motivations may not align with the objectives of the project. Games may introduce or enhance unintended motivations that could dampen the original crowdsourcing effort.

A practical implication of our work is that developers of crowdsourcing games need to carefully balance entertaining game design against task design, since overemphasizing one may weaken the other. One way to address this issue is concerted messaging that stresses the intended goal of the crowdsourcing game, so that players remain aware of their primary objective during gameplay: producing useful contributions. A second approach is to incorporate quality control mechanisms (e.g. ratings, reviews) and player reputation systems that emphasize the quality over the quantity of contributions. A third is to support players' various motivations beyond content sharing. This includes features for building communities where relationships can be established, categorization of contributions to facilitate future access, and customization of player profiles to support self-presentation.

Some shortcomings could limit the generalizability of our findings. First, our participants were recruited from local universities, and most came from technical disciplines and were game players. Outcomes could differ with participants from other backgrounds. Second, our crowdsourcing game was based on the virtual pet genre, whose rules may have influenced participants’ behavior. Investigating other game genres would help ascertain whether our content categories are stable. Further, we did not examine the quality of contributions, and such a comparative analysis would be useful in future work. Finally, our study was carried out over a short period in which all participants were new to the apps. This may introduce novelty effects that could influence our findings. A longer-term study may yield different outcomes and would be a worthwhile area of investigation.

References

Entertainment Software Association. (2016). Essential facts about the computer and video game industry. http://www.theesa.com/wp-content/uploads/2016/04/Essential-Facts-2016.pdf

Kirriemuir, J. (2005). Parallel worlds: Online games and digital information services. D-Lib Magazine. http://www.dlib.org/dlib/december05/kirriemuir/12kirriemuir.html