ISSN: 2287-9099

Development of Evaluation Perspective and Criteria for the DataON Platform

Abstract

This preliminary study aims to develop the evaluation framework needed to evaluate the DataON platform. It has four objectives: first, to examine expert perceptions of the DataON platform's current stage of construction; second, to evaluate the importance, stability, and usability of DataON platform features relative to OpenAIRE features; third, to derive weights for the evaluation perspectives to be used in future DataON platform evaluations; and fourth, to examine expert preferences for each evaluation perspective and derive unbiased evaluation criteria. The study surveyed potential stakeholders of the DataON platform: 12 professionals, each with at least 10 years of experience in the field. Across all 57 functions and services, importance scored 3.1 out of 5, stability -0.07 points, and usability -0.05 points. For the 42 features and services, importance scored 3.04 points, stability -0.58 points, and usability -0.51 points. In particular, the stability and usability gaps for the 42 functions and services provided as of 2018 were larger than those for all functions, which is attributed to these functions having been made more stable and user-friendly after development. Among the evaluation perspectives, collection quality has the highest weight (27%), followed by interface usability (22%) and service quality (19%); system performance efficiency and user feedback solicitation are equally weighted at 16% each.

- keywords

- evaluation perspective, evaluation criteria, research data platform, DataON, Digital Library

1. INTRODUCTION

In the era of the Fourth Industrial Revolution, in which data is at the center of research, interest in research data is intense. Devices ranging from small sensors to large laboratory instruments produce data, often called the new “crude oil,” in real time. The era of data-intensive science, in which data is the central tool of research, has arrived. As Adams et al. (2017) argue, libraries have much to prepare for their users in the age of data-intensive science. This is why libraries and information centers should actively participate in the open science movement, which is embodied in open access, open data, and open repositories. In recent years, data has been at the heart of the open science movement; in academic research, this data is called research data.

Research data is data that is collected, observed, or created for analysis in order to produce original research results. Research data is central to responsible research, which refers to the ability to justify conclusions on the basis of the data acquired and generated through research and furnished to other researchers for scrutiny and/or verification (Singh, Monu, & Dhingra, 2018). Research data is an essential part of the scholarly record, and research data management is increasingly seen as an important role for academic libraries (Tenopir et al., 2017).

The open science movement aims to provide access to and use of publicly funded research data without legal, financial, or technical barriers, and it has led to various forms of activity in major industrialized countries involving funding agencies, libraries, publishers, and researchers. A representative example is the data management plan (hereafter DMP), which has become commonplace; in Korea, DMPs began to be piloted in September 2019. A DMP addresses questions about research data types and formats, metadata standards, ethics and legal compliance, data storage and reuse, assignment of data management responsibilities, and resource requirements (Barsky et al., 2017).

In Korea, the policy direction for research data and DMPs has recently been set. According to the Republic of Korea's Ministry of Science and ICT (2019), the government's R&D budget for 2020 was raised by 17.3% to 24.1 trillion won. In one of the budget's major investment areas, revitalizing the data economy and upgrading data utilization, big data platforms will support the continuous creation of public and industrial innovation services by supplying the high-quality data the market requires. Korea also plans to create an ecosystem that generates value at every stage of data accumulation, distribution, and use by linking platforms.

As part of this initiative, the Korea Institute of Science and Technology Information (hereinafter KISTI) is developing the Korea Open Research Data platform (DataON, https://dataon.kisti.re.kr/). DataON, which is being developed to systematically collect, manage, preserve, analyze, publish, and serve research data produced in Korea, is benchmarked on Europe's OpenAIRE. OpenAIRE (https://www.openaire.eu) has been building research data platforms for more than 12 years, from 2008 to 2020, and is among the earliest and most systematic research data projects in the world. OpenAIRE has the largest research (meta)data corpus in the world, with more than 36 million research and related records, and provides a search service over them. As of December 2019, it held 31.2 million publications, 1.6 million research data items, 110,000 software items, and 3.1 million other items, and as of 2018 it had developed and was serving 42 features. Since 2018, DataON has been developed as KISTI's own project with the support of the Ministry of Science and ICT. Development of the pilot service began in June 2018, pilot operation was completed in June 2019, and the first phase of construction was completed in December 2019. DataON serves 1.8 million overseas data sets and 1,000 domestic data sets. KISTI has developed all of OpenAIRE's features and services at the level of major categories, and DataON also provides a research data analysis environment that OpenAIRE does not.

Such platforms need a variety of features to create more value. For example, developers may want to use the open application programming interface (API) or the data provided by the platform. Administrators may want to host data managed by their organization on the platform; institutions with small budgets and staffs are particularly likely to make such demands. Researchers may wish to deposit the research data they produce or collect directly on the platform and have permanent access to it. Researchers may also wish to use the platform's data analysis tools or its researcher community services. Research funding agencies may use statistical data related to the implementation of national research data policy and national data governance.

As mentioned above, the DataON platform serves users from various interest groups. The platform should be evaluated continuously, from the construction stage through final operation and function updates. The DataON platform should therefore be evaluated using evaluation perspectives and criteria that reflect the concerns of its various stakeholder groups. According to Saracevic (2004), an evaluation framework should include well-defined evaluation criteria as well as valid and reliable measurement methods. Digital library evaluation is characterized by the integration of quantitative and qualitative evaluation. A research data platform can be evaluated with the same data collection and evaluation methods used for digital library platforms. However, because the core content of the DataON platform is research data, the evaluation perspectives and criteria may differ even when an existing evaluation framework is used. The purpose of this study is fourfold. The first objective is to examine expert perceptions of the DataON platform's current stage of construction. The second objective is to evaluate the importance, stability, and usability of DataON platform features relative to OpenAIRE features. The third objective is to derive weights for the evaluation perspectives for future DataON platform evaluation. The fourth objective is to examine expert preferences for each evaluation perspective and to derive unbiased evaluation criteria. This study uses the evaluation framework of Xie (2006, 2008), which is used to evaluate digital library platforms. It surveys the platform's stakeholder groups on the weights of the evaluation perspectives presented by Xie and proposes additional evaluation perspectives and criteria.

2. PREVIOUS RESEARCH

The research data platform aims to provide researchers with research data services. Its key functions are collecting, storing, managing, preserving, and publishing data, which makes it very similar to a digital library platform; the difference is that its content and users are specific to research.

Unlike traditional library evaluation, digital library platform evaluation considers interface design, system performance, sustainability, user effects, and user participation (Xie & Matusiak, 2016). Digital library platforms need to adapt to rapidly changing user needs and digital environments, and the functions and services that address these changes should be incorporated into the platform. For this reason, there is no single way to evaluate a digital library platform; it must be evaluated from various angles. The same applies to research data platforms.

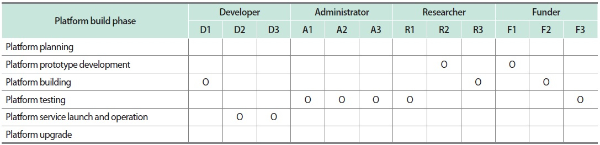

Platform evaluation involves a detailed assessment of the value and meaning of a platform. It is a systematic assessment of how well the platform is achieving its intended purpose and how far it accommodates the needs of its stakeholder groups. Evaluation should also consider the platform's direction, that is, whether it is being developed and operated to meet its objectives. Systematic evaluation requires guidelines, for example on evaluation objectives, timing, targets, methods, and how the results are fed back into the platform development process (Xie & Matusiak, 2016). Among these, the evaluation objective is especially important because it is the foundation of platform evaluation. Platform building comprises planning, prototype development, platform building, platform testing, service launch and operation, and platform upgrade, and the platform can be evaluated at each of these stages, each of which may have a different objective. Evaluation criteria and measurement methods may differ depending on the evaluation purpose (Buttenfield, 1999), and they also differ depending on what is being evaluated.

Meanwhile, research on evaluation frameworks for digital libraries has progressed since the advent of digital libraries. According to Xie and Matusiak (2016), Tsakonas, Kapidakis, and Papatheodorou (2004) suggested usability, usefulness, and system performance as evaluation criteria. Saracevic (2004) presented content, technology, interfaces, processes/services, users, and contexts as evaluation perspectives. Fuhr et al. (2007) developed the Digital Library Evaluation Framework by incorporating Saracevic's four perspectives (construct, context, criteria, and methodology) and key questions related to why, what, and how. Candela et al. (2007) presented content, functionality, quality, policy, users, and architecture as evaluation perspectives. Xie (2006, 2008) presented collection quality, system performance efficiency, interface convenience, service quality, and user feedback as evaluation perspectives. Zhang (2010) presented content, description, interface, service, user, and context as evaluation perspectives, and further examined and validated Saracevic's evaluation framework from the perspective of digital library interest groups (administrators, developers, librarians, researchers, and user groups). Tsakonas and Papatheodorou (2011) presented effectiveness, performance measurement, service quality, outcome assessment, and technical excellence as assessment perspectives. Lagzian, Abrizah, and Wee (2013) presented six evaluation perspectives (resource, motivation, location, process, people, and time) and 36 evaluation criteria.

The core entities of the DataON platform are systems, collections, services, interfaces, and users. Evaluating the DataON platform requires evaluating both the system that composes the platform and the data collection built into it. The logical organization of collections is delivered through various types of services, and services can be accessed through various interfaces. The user of an interface may be a researcher or a system. Considering these core entities, we decided to investigate the weight of each of the evaluation perspectives presented by Xie, from among the evaluation frameworks reviewed above.

3. RESEARCH METHOD

This preliminary study aims to develop the evaluation framework needed to evaluate the DataON platform. It surveyed potential stakeholders of the DataON platform: 12 professionals, each with at least 10 years of experience in the field, consisting of three software developers, three data managers, three researchers, and three staff members from funding organizations. To understand the DataON platform, its functions were also evaluated against those of OpenAIRE.

To examine expert perceptions of DataON's current stage of construction, we used the platform building phases proposed by Buttenfield (1999): platform planning, platform prototyping, platform building, platform testing, platform service launch and operation, and platform upgrade. To review DataON's functions against OpenAIRE's, the importance, stability, and usability of each function were rated on a 5-point scale.

To derive weights for the evaluation perspectives and to propose further evaluation criteria, we used the user-driven evaluation model developed by Xie (2006, 2008). The Analytic Hierarchy Process (hereinafter AHP), a well-known multi-criteria decision-making method, was also used to obtain the weights of the evaluation perspectives.

4. RESEARCH DATA PLATFORM CAKE AND STAKEHOLDERS

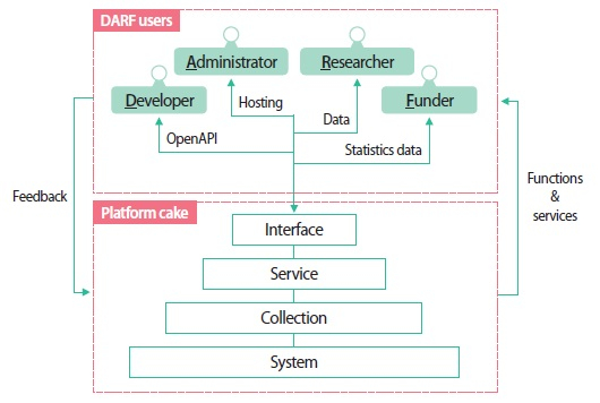

Xie (2006, 2008) proposed a user-driven evaluation model whose evaluation criteria are grouped into five perspectives: interface convenience, collection quality, service quality, system performance efficiency, and user feedback. In this study, the hierarchical structure of the platform corresponding to Xie's perspectives is constructed as shown in Fig. 1.

Fig. 1.

Research data platform and its stakeholders. Developers may want to use the open application programming interface (API), data, and so on. Administrators may want to use the platform's resources to host their data. Researchers may want to reuse research data, analytic tools, community services, and so on. Funders may want to use statistics that provide insights for building national research data policy. The platform cake is a hierarchical representation of Xie's evaluation perspectives.

The bottom of Fig. 1 shows the hierarchy of the platform, which this paper calls the platform cake. The platform cake has a system layer at the bottom and a collection layer above it. A collection is a logical organization of the resources served by the platform. Collections are delivered through services that work in a variety of ways, so the service layer sits above the collection layer. Services, in turn, are provided through the platform's interfaces, such as a search service used by people or an open API used by other systems.
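The layering described above can be made concrete as an ordered structure. The sketch below is a hypothetical illustration, not part of DataON: the layer names follow Fig. 1, while pairing each layer with one of Xie's perspectives (and treating the stakeholders on top as the layer covered by user feedback solicitation) is an assumption of this sketch, based on the statement that the platform cake represents Xie's evaluation perspectives.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One layer of the platform cake and the perspective assumed to cover it."""
    name: str
    perspective: str


# Layers listed bottom-up, as in Fig. 1. The pairing with Xie's perspectives
# (and treating stakeholders as the top layer) is an assumption of this sketch.
PLATFORM_CAKE = (
    Layer("system", "system performance efficiency"),
    Layer("collection", "collection quality"),
    Layer("service", "service quality"),
    Layer("interface", "interface usability"),
    Layer("stakeholders", "user feedback solicitation"),
)


def layers_below(name: str) -> list[str]:
    """Return the names of the layers that the given layer builds on."""
    names = [layer.name for layer in PLATFORM_CAKE]
    return names[: names.index(name)]


def perspective_for(name: str) -> str:
    """Look up the evaluation perspective paired with a layer."""
    for layer in PLATFORM_CAKE:
        if layer.name == name:
            return layer.perspective
    raise KeyError(name)


print(layers_below("service"))       # ['system', 'collection']
print(perspective_for("interface"))  # interface usability
```

Keeping the layers in a single ordered tuple makes the bottom-up dependency explicit: a service can only be evaluated meaningfully once the collection and system beneath it are in place.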

The top of Fig. 1 represents the stakeholder groups surrounding the DataON platform. In this paper, the developer, manager (administrator), researcher, and research funder groups are set as the core interest groups of the DataON platform.

Developer groups are very interested in APIs that can leverage the research data or metadata managed by the platform. Developers range from those building systems at other institutions that work with the platform to individuals building mashup services. Managers can deposit large amounts of research data from their institutions on the DataON platform, and they may also want platform data to be retrievable through their own institution's search service. Researchers can deposit their research data on the DataON platform. Research funding groups may be interested in the management and use of research data generated from research grants.

5. NATIONAL RESEARCH DATA PLATFORM: DATAON EVALUATION PERSPECTIVES AND CRITERIA DEVELOPMENT

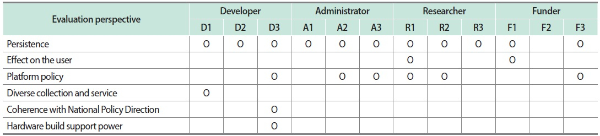

The survey on DataON's current construction stage was conducted with the same experts who evaluated the functions and services of OpenAIRE and DataON. Table 1 shows how each stakeholder group perceives the platform's construction stage. Two experts placed DataON at the prototype development stage, three at the platform building stage, five at the testing stage, and two at the service launch and operation stage. All members of the data manager group judged DataON to be in the testing phase. Taken together, DataON is currently at the platform building and testing stage.
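The "taken together" judgment above follows from a simple tally of the 12 stage assessments. The sketch below reproduces that tally from the counts reported in the text; the stage labels are shortened forms chosen for illustration.

```python
from collections import Counter

# Stage judgments of the 12 experts, as reported in the text for Table 1.
judgments = (
    ["prototype development"] * 2
    + ["platform building"] * 3
    + ["platform testing"] * 5
    + ["service launch and operation"] * 2
)

counts = Counter(judgments)
total = sum(counts.values())

# List stages from most to least frequent, with their share of the panel.
for stage, n in counts.most_common():
    print(f"{stage}: {n}/{total} ({n / total:.0%})")

# The two most frequent stages summarize where the panel places DataON.
top_two = [stage for stage, _ in counts.most_common(2)]
print(top_two)  # ['platform testing', 'platform building']
```

Testing and building together account for 8 of the 12 judgments, which is why the panel's overall view places DataON between those two stages.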

The experts also explained the reasons for their assessments of the platform's level. The developer group's comments can be summarized as follows. First, the basic functions appear to be fully implemented, and the base data for DataON operation now needs to be collected. Second, the platform is concisely built around the essential functions of a national research data platform and appears to focus on stable operation. Third, DataON needs to develop additional OpenAIRE functions.

The manager group's comments can be summarized as follows. First, the platform appears to have been established and the service launched, but judging from the content and users currently registered, only a limited group of users seems to be testing it. Second, little data has been built up, so offering the service to the general public seems premature; additional testing by each user group would help, and more data needs to be built. Third, not all services currently work, and the interface has errors and room for improvement.

The researchers' opinions can be summarized as follows. First, more features need to be added to the platform's services, and errors need to be corrected. Second, although the implemented functions are visible, the many service demands of users still need to be reflected. Third, the framework of the platform is in place, but functional improvement is still in progress.

The funding agency group's opinions can be summarized as follows. First, the platform is judged to be at the function definition and implementation stage. Second, the relevant database has been built but not yet verified, so the platform is judged to be at the construction stage. Third, many trials, errors, and inquiries are expected once researchers request data registration and research institutes actually begin managing their data.

5.1. DataON Analysis

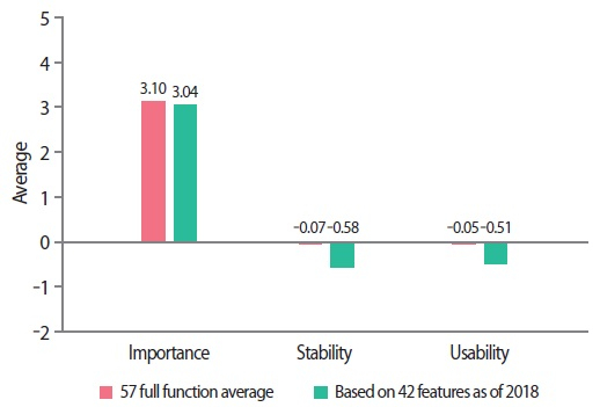

To analyze the functions and services of the DataON platform, we used importance, stability, and usability as evaluation criteria. Importance refers to how essential a function or service is, given the purpose of a research data platform. Stability refers to the degree to which a function or service operates reliably as intended. Usability refers to the degree to which a function or service is easy to use. The importance, stability, and usability of each function and service were measured on a 5-point scale. Fig. 2 compares the importance, stability, and usability of the functions and services of OpenAIRE and DataON. As of 2018, OpenAIRE provided 42 functions, 21 of which (50%) are also known to be provided by DataON. Meanwhile, DataON provides 15 functions that OpenAIRE does not, so the two platforms together offer 57 functions and services. A 5-point Likert scale was used to rate each DataON function against the corresponding OpenAIRE function. Importance was rated from 1 to 5 points, and stability and usability from -2 to 2 points. The closer importance is to 5 points, the higher the importance. For stability and usability, -2 means that OpenAIRE is much better, 2 means that DataON is much better, and 0 means that the two are equivalent.
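The two scales described above can be sketched as follows. This is a minimal illustration, assuming each expert gives one integer rating per function; the function names and the ratings in the example are made up and are not study data.

```python
from statistics import mean

# Scales as described in the text: importance is rated 1..5; stability and
# usability are rated -2..2, where -2 = OpenAIRE much better,
# 0 = no difference (the midpoint), and 2 = DataON much better.
IMPORTANCE_SCALE = range(1, 6)
COMPARATIVE_SCALE = range(-2, 3)


def validate(ratings, scale):
    """Reject any rating that is not a point on the given scale."""
    bad = [r for r in ratings if r not in scale]
    if bad:
        raise ValueError(f"ratings outside scale: {bad}")
    return ratings


def mean_importance(ratings):
    """Average importance rating for one function (1..5)."""
    return mean(validate(ratings, IMPORTANCE_SCALE))


def summarize_comparison(ratings):
    """Average a comparative rating and report which platform it favors."""
    avg = mean(validate(ratings, COMPARATIVE_SCALE))
    if avg > 0:
        leaning = "favors DataON"
    elif avg < 0:
        leaning = "favors OpenAIRE"
    else:
        leaning = "no difference"
    return avg, leaning


# Made-up stability ratings from 12 hypothetical experts for one function.
stability = [0, 1, -1, 0, 0, 1, 0, -1, 0, 1, 0, 0]
print(summarize_comparison(stability))
```

Because the comparative scale is centered on 0, the sign of a mean indicates which platform the panel leans toward, and its magnitude indicates how strongly; per-function means can then be averaged over any set of functions to give summary scores.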

Fig. 2.

Comparison of the importance, stability, and usability of functions and services of OpenAIRE and DataON.

We measured the importance, stability, and usability of all 57 functions, as well as of the 21 features implemented in DataON among the 42 features that OpenAIRE had developed as of 2018. All 57 functions were rated 3.06 out of 5 in importance, with stability at 0.11 points and usability at 0.05 points. The 21 functions scored 3.12 in importance, 0.25 points in stability, and 0.27 points in usability. Because importance exceeds 3 points, the functions developed in OpenAIRE and DataON can be judged to reflect the requirements of stakeholder groups and to be developed and operating well. Both stability and usability are close to zero, so there is no large difference in functional stability or ease of use between OpenAIRE and DataON. However, for the 21 features and services implemented in DataON, DataON scored slightly higher, although the differences are minor. This is attributed to DataON benchmarking OpenAIRE's functions while applying the latest web technologies and new requirements.

5.2. Analytic Hierarchy Process Design and Execution

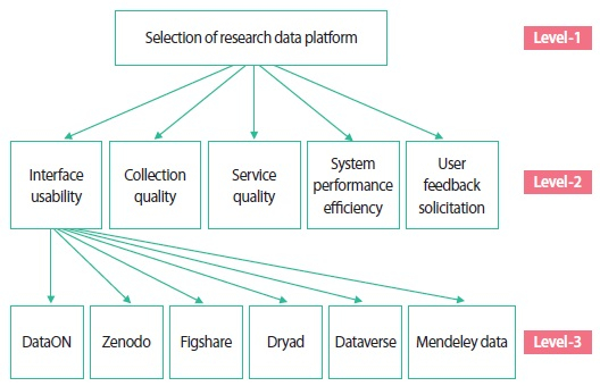

As the first step of the AHP, we developed a hierarchical structure with the goal at the top level, the criteria at the second level, and the alternatives at the third level. Fig. 3 shows the hierarchical structure model for the AHP analysis. Level 1 is the goal of selecting a research data platform. Level 2 consists of the evaluation perspectives developed by Xie (2006, 2008), used without modification. Level 3 consists of DataON and other research data platforms that could replace it.
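The three-level hierarchy can be sketched as follows. In standard AHP, the criterion weights (level 2) are combined with each alternative's local priorities under each criterion (level 3) to rank the alternatives. This study reports only the criterion weights, so the two platforms and their local priorities below are entirely hypothetical; the criterion weights are the combined weights reported in this section.

```python
# Three-level AHP hierarchy in the spirit of Fig. 3.
GOAL = "select a research data platform"  # level 1
CRITERIA = [                              # level 2: Xie's perspectives
    "interface usability",
    "collection quality",
    "service quality",
    "system performance efficiency",
    "user feedback solicitation",
]


def synthesize(criterion_weights, local_priorities):
    """Standard AHP synthesis: an alternative's global score is the sum,
    over criteria, of the criterion weight times its local priority."""
    return {
        alternative: sum(criterion_weights[c] * prios[c] for c in CRITERIA)
        for alternative, prios in local_priorities.items()
    }


# Criterion weights: the combined weights reported in this study.
weights = {
    "interface usability": 0.22,
    "collection quality": 0.27,
    "service quality": 0.19,
    "system performance efficiency": 0.16,
    "user feedback solicitation": 0.16,
}

# Level 3: made-up local priorities for two hypothetical platforms; under
# each criterion, the priorities of the alternatives sum to 1.
local = {
    "Platform A": dict(zip(CRITERIA, [0.5, 0.6, 0.4, 0.5, 0.5])),
    "Platform B": dict(zip(CRITERIA, [0.5, 0.4, 0.6, 0.5, 0.5])),
}

scores = synthesize(weights, local)
print({k: round(v, 3) for k, v in scores.items()})
# {'Platform A': 0.508, 'Platform B': 0.492}
```

Platform A wins here only because it is stronger on collection quality, the most heavily weighted perspective; this illustrates how the level-2 weights drive the final ranking.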

Twelve experts from the DataON platform's stakeholder groups rated the relative importance of the perspectives for evaluating the platform, assuming a platform selection scenario. A normalized pair-wise comparison matrix was then created using the following scale of relative importance:

- 3: Very important
- 2: Important
- 1: Equally important
- 1/2: Not important
- 0: Not important at all
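Building a pairwise comparison matrix from such judgments can be sketched as below. Note that standard AHP uses positive judgments with reciprocal values (for example, 1/3 as the reciprocal of 3), so this sketch accepts only positive values; the perspective abbreviations and the judgments of the single respondent are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

# Hypothetical short labels for Xie's five perspectives.
LABELS = ["IU", "CQ", "SQ", "SPE", "UFS"]


def reciprocal_matrix(labels, judgments):
    """Build an AHP pairwise comparison matrix.

    judgments[(a, b)] says how much more important a is than b, for every
    pair with a listed before b. The diagonal is 1 and each lower-triangle
    cell is the reciprocal of its mirror cell, so m[i][j] * m[j][i] == 1.
    """
    index = {label: i for i, label in enumerate(labels)}
    n = len(labels)
    m = [[Fraction(1) for _ in range(n)] for _ in range(n)]
    for a, b in combinations(labels, 2):
        value = Fraction(judgments[(a, b)])
        if value <= 0:
            # A reciprocal matrix cannot hold 0: its mirror would be 1/0.
            raise ValueError(f"judgment {a} vs {b} must be positive")
        i, j = index[a], index[b]
        m[i][j] = value
        m[j][i] = 1 / value
    return m


# Made-up judgments from one hypothetical respondent.
judgments = {
    ("IU", "CQ"): "1/2", ("IU", "SQ"): 1, ("IU", "SPE"): 2, ("IU", "UFS"): 2,
    ("CQ", "SQ"): 2, ("CQ", "SPE"): 2, ("CQ", "UFS"): 3,
    ("SQ", "SPE"): 1, ("SQ", "UFS"): 1,
    ("SPE", "UFS"): 1,
}

matrix = reciprocal_matrix(LABELS, judgments)
for label, row in zip(LABELS, matrix):
    print(label, [str(x) for x in row])
```

Only the 10 upper-triangle judgments are needed for five perspectives; exact fractions keep the reciprocal property free of rounding error.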

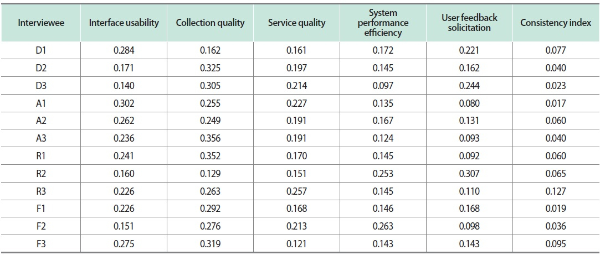

Table 2 shows the normalized pair-wise comparison and consistency index matrix prepared from the questionnaire data. Of the 12 respondents, 11 responded consistently, with a consistency index of 0.1 or less; only one researcher had a slightly higher consistency index of 0.127. In this study, this researcher's responses were nevertheless considered meaningful and were included in the weighted average calculation for each evaluation perspective.
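The normalization and consistency check can be sketched with the common column-normalization approximation of AHP priorities. In Saaty's formulation, CI = (lambda_max - n) / (n - 1), and the 0.1 threshold is conventionally applied to the consistency ratio CR = CI / RI, where RI is a tabulated random index; the sketch therefore computes both. The example matrix is made up.

```python
# Saaty's random consistency index (RI) for matrices of size n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_priorities(matrix):
    """Return (weights, CI, CR) for a positive reciprocal matrix (n >= 2)."""
    n = len(matrix)
    # 1. Normalize each column so that it sums to 1.
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    normalized = [[matrix[i][j] / col_sums[j] for j in range(n)]
                  for i in range(n)]
    # 2. Priority weights are the row averages of the normalized matrix.
    weights = [sum(row) / n for row in normalized]
    # 3. Estimate lambda_max as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    # 4. Consistency index and consistency ratio.
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n] if RANDOM_INDEX[n] > 0 else 0.0
    return weights, ci, cr


# A made-up, slightly inconsistent 3 x 3 matrix.
example = [
    [1, 2, 4],
    [1 / 2, 1, 3],
    [1 / 4, 1 / 3, 1],
]
weights, ci, cr = ahp_priorities(example)
print([round(w, 3) for w in weights], round(ci, 4), round(cr, 4))
print("acceptable" if cr <= 0.1 else "revise judgments")
```

For a perfectly consistent matrix (every entry equal to w_i / w_j), the procedure recovers w exactly and CI is 0; the further a respondent's judgments depart from such a matrix, the larger CI and CR become.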

Table 2.

Normalized pair-wise comparison and consistency index matrix related to evaluation perspectives

D, developer; A, administrator; R, researcher; F, funder.

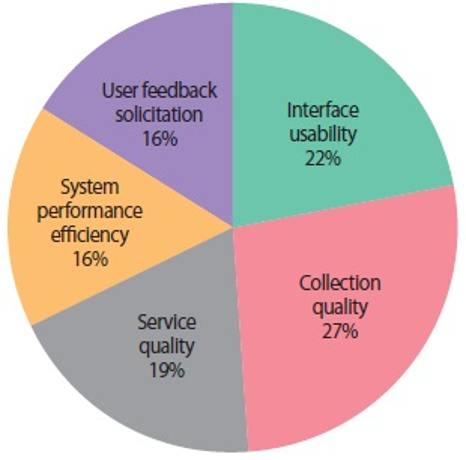

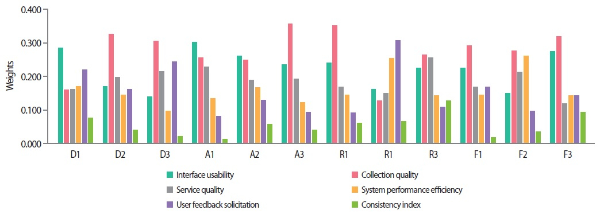

Fig. 4 and Table 3 show the results of surveying the stakeholder groups related to the DataON platform on the weights of the platform evaluation perspectives. In Fig. 4 and Table 3, ‘D’ means developer, ‘R’ means researcher, ‘A’ means administrator, and ‘F’ means funder.

Fig. 4.

Weights for the evaluation perspectives for evaluating the platform by stakeholder groups related to the DataON platform. D, developer; A, administrator; R, researcher; F, funder.

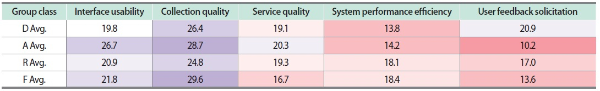

Table 3.

Weights of the evaluation perspectives for each group, expressed in color tones

D, developer; A, administrator; R, researcher; F, funder; Avg., average.

Fig. 4 shows the weights that respondents in each stakeholder group related to the DataON platform assigned to the evaluation perspectives. The consistency index indicates whether respondents answered the weighting survey consistently. The administrator group responded most consistently, with an average consistency index of 0.04, followed by the developer and funder groups, each with an average of 0.05. By contrast, the researcher group's average consistency index was 0.08, noticeably higher than those of the other groups, because one researcher's responses had a consistency index of 0.127.

Table 3 shows, in color tones, the weights that each group of respondents from the DataON platform's stakeholder groups assigned to the evaluation perspectives. Darker purple indicates a higher weight, colors closer to red indicate a lower weight, and white indicates a weight near the middle of the range. The developer group assigned the highest weight, 26.4%, to collection quality, followed by 20.9% to user feedback solicitation, and the lowest weight, 13.8%, to system performance efficiency. The administrator group assigned the highest weight, 28.7%, to collection quality, followed by 26.7% to interface usability, and the lowest weight, 10.2%, to user feedback solicitation, the perspective that the developer group had weighted second highest.

All four groups (D, A, R, and F) gave the highest weight to collection quality. The administrator, researcher, and funder groups gave the second highest weight to interface usability, whereas the developer group alone gave its second highest weight to user feedback solicitation. In contrast, the administrator, researcher, and funder groups gave the lowest weight to user feedback solicitation.

Fig. 5 shows the combined weights of all stakeholder groups for each evaluation perspective. Collection quality has the highest weight (27%), followed by interface usability (22%) and service quality (19%); system performance efficiency and user feedback solicitation are equally weighted at 16% each.
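The text does not state exactly how the group weights were combined. A minimal sketch, assuming each perspective's combined weight is the mean of the four group weights, renormalized to sum to 1, is shown below. The geometric mean, another common way to aggregate AHP priorities across respondents, is included as an option. The group vectors are made up and are not the values in Table 3.

```python
from math import prod

PERSPECTIVES = ["interface usability", "collection quality", "service quality",
                "system performance efficiency", "user feedback solicitation"]

# Made-up weight vectors for the four groups (each sums to 1).
group_weights = {
    "D": [0.20, 0.30, 0.20, 0.10, 0.20],
    "A": [0.30, 0.30, 0.20, 0.10, 0.10],
    "R": [0.20, 0.20, 0.20, 0.20, 0.20],
    "F": [0.30, 0.20, 0.20, 0.20, 0.10],
}


def combine(vectors, method="arithmetic"):
    """Combine group weight vectors into one vector that sums to 1."""
    columns = list(zip(*vectors))  # one tuple of group values per perspective
    if method == "arithmetic":
        combined = [sum(col) / len(col) for col in columns]
    elif method == "geometric":
        combined = [prod(col) ** (1 / len(col)) for col in columns]
    else:
        raise ValueError(f"unknown method: {method}")
    total = sum(combined)
    return [value / total for value in combined]


overall = combine(list(group_weights.values()))
for name, w in sorted(zip(PERSPECTIVES, overall), key=lambda p: -p[1]):
    print(f"{name}: {w:.0%}")
```

With equally sized groups (three experts each), the arithmetic mean gives every group the same influence on the combined weights; the geometric mean dampens the effect of a single group assigning an unusually high or low weight.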

5.3. Investigating Preferences and Evaluation Criteria by Expert Group on Evaluation Perspectives

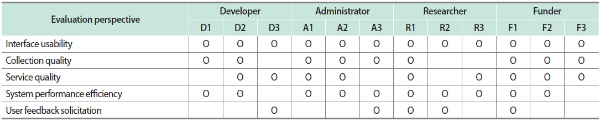

Table 4 shows the evaluation perspectives preferred by each stakeholder group of the DataON platform. Interface usability was preferred by experts from all four groups (D, A, R, and F), whereas only five of the 12 experts preferred user feedback solicitation. Collection quality was preferred by all administrators and funders, as well as by two developers and one researcher. Service quality was preferred by all funders, and system performance efficiency by all administrators and researchers.

Table 5 shows additional evaluation perspectives suggested by the experts. The persistence perspective was proposed by eleven experts and the platform policy perspective by six. Effect on users, diverse collections and services, coherence with national policy direction, and hardware build support capacity were also proposed.

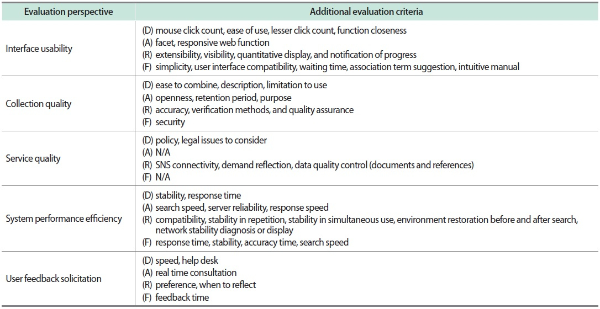

Table 6 shows the evaluation criteria that experts in each stakeholder group of the DataON platform proposed for Xie's evaluation perspectives. For interface usability, they proposed interface simplicity and the response speed of web functions; for collection quality, openness and usage restrictions, verification, and security related to the use of content; for service quality, social networking service (SNS) linkages and policies; and for system performance efficiency, stability, response time, and search speed.

Table 6.

Suggesting evaluation criteria corresponding to Xie’s evaluation viewpoints for each stakeholder group of DataON platform

D, developer; A, administrator; R, researcher; F, funder; N/A, not applicable; SNS, social networking service.

Because the experts were not shown Xie's evaluation criteria when proposing criteria, some of the proposed criteria may overlap with Xie's. The results of this study may be used in subsequent studies to further derive and weight evaluation criteria.

6. DISCUSSION

According to Mayernik (2015), institutional support for data and metadata management within a single organization or discipline is uneven. A research data platform such as DataON is therefore essential for organizations or academic fields that, because of their size or budget, require national support. Countries and nonprofit organizations are creating various platforms like DataON to support open science. For example, in biodiversity research, the GFBio project addresses the data management challenges caused by the size and heterogeneity of data, collaborating with museums, archives, biodiversity researchers, and computer scientists to align with the data life cycle (Diepenbroek et al., 2014). A multidisciplinary example is the Open Science Framework (OSF). According to Foster and Deardorff (2017), the OSF is a tool that promotes open, centralized workflows by enabling capture of different aspects and products of the research lifecycle, including developing a research idea, designing a study, storing and analyzing collected data, and writing and publishing reports or papers. As Korea's national research data platform, DataON needs to continuously benchmark the functions offered by OSF. Compared with OSF, DataON provides a research data analysis function, which OpenAIRE does not provide, but it offers no function to support writing reports or theses. In particular, DataON's community functions need to support the whole research data lifecycle: if researchers were supported from idea development and research design onward, the platform could secure many more users and become more active.

Meanwhile, according to Tenopir et al. (2017), many European libraries at the time offered or planned to offer consultative rather than technical or practical research data services. This may suggest that, as science and technology mature and become widespread, platforms should expand beyond providing an environment for storing and reusing research data toward consulting researchers directly. Related platform functions therefore need further development, and further research on evaluation perspectives and criteria is needed.

7. CONCLUSION

This preliminary study aimed to develop a framework for evaluating the DataON platform, which is under development in Korea. We surveyed 12 experts from the stakeholder group surrounding the DataON platform. DataON is currently in the platform building and testing stage. As of 2018, OpenAIRE provides 42 functions, 17 of which (40.5%) are also provided by DataON. Across all 57 functions and services, importance was rated 3.1 out of 5, stability -0.07 points, and usability -0.05 points. For the 42 functions and services provided by OpenAIRE as of 2018, importance was rated 3.04 points, stability -0.58 points, and usability -0.51 points. Both sets received negative stability and usability scores, indicating that OpenAIRE's functions are more stable and convenient to use. In particular, the stability and usability scores for the 42 functions and services provided as of 2018 were larger in magnitude (more strongly negative) than those for all functions, which is attributed to these functions having been made more stable and user-friendly after their development.
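The coverage figure and the sign convention behind the stability and usability scores can be made concrete with a short Python sketch. The class and function names are hypothetical; the numbers are the values reported above, and treating a negative score as favoring OpenAIRE follows the interpretation given in the text rather than a documented scoring formula.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSetScores:
    """Expert scores reported for one set of functions (hypothetical container)."""
    name: str
    importance: float  # rated on a 5-point scale
    stability: float   # relative score; negative is read as favoring OpenAIRE
    usability: float   # relative score; same sign convention


# Values as reported in this study.
REPORTED = [
    FeatureSetScores("all 57 functions and services", 3.10, -0.07, -0.05),
    FeatureSetScores("42 OpenAIRE functions (2018)", 3.04, -0.58, -0.51),
]


def coverage(shared: int, total: int) -> float:
    """Percentage of OpenAIRE's functions that DataON also provides."""
    return round(100 * shared / total, 1)


def favored_platform(score: float) -> str:
    """Apply the text's reading: below zero, OpenAIRE was judged better."""
    if score < 0:
        return "OpenAIRE"
    if score > 0:
        return "DataON"
    return "tie"


if __name__ == "__main__":
    print(f"DataON covers {coverage(17, 42)}% of OpenAIRE's 42 functions")
    for s in REPORTED:
        print(f"{s.name}: stability favors {favored_platform(s.stability)}, "
              f"usability favors {favored_platform(s.usability)}, "
              f"stability gap {abs(s.stability):.2f}")
```

Comparing `abs(s.stability)` across the two sets shows that, under this reading, OpenAIRE's advantage is larger for the 42 functions available as of 2018.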

As the first step of the analytic hierarchy process (AHP), we developed a hierarchical structure with the goal at the top level, the attributes/criteria at the second level, and the alternatives at the third. Twelve experts from the DataON platform's stakeholder group then judged the relative importance of the perspectives for evaluating the platform, assuming a platform selection scenario. All four DARF groups (developers, administrators, researchers, and funders) gave the highest weight to collection quality. The administrator, researcher, and funder groups gave the next highest weight to interface usability, whereas the developer group gave its next highest weight to user feedback solicitation. By contrast, the administrator, researcher, and funder groups gave the lowest weight to user feedback solicitation. When the weights of the stakeholder groups are combined, collection quality has the highest weight (27%), followed by interface usability (22%) and service quality (19%), with system performance efficiency and user feedback solicitation equally weighted at 16%.

The experts also proposed additional evaluation perspectives: persistence, platform policy, effect on the user view, diverse collections and services, coherence with national policy direction, and hardware build support capacity. As evaluation criteria, they proposed interface simplicity, response speed of web functions, openness and usage restrictions, verification, and security related to the use of content, SNS linkages and policies, stability, response time, and search speed.
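The AHP weighting step described above can be illustrated with a minimal Python sketch. It is not the study's computation: the pairwise judgment matrix is hypothetical, the priority vector is estimated with the row geometric-mean method (a common alternative to Saaty's principal-eigenvector method), and stakeholder groups are combined by averaging their weight vectors, an assumed rule because the exact aggregation is not described here. Only the reported combined weights come from the text.

```python
import math
from typing import Sequence

Matrix = Sequence[Sequence[float]]

PERSPECTIVES = [
    "collection quality",
    "interface usability",
    "service quality",
    "system performance efficiency",
    "user feedback solicitation",
]

# Combined weights reported in this study (27%, 22%, 19%, 16%, 16%).
REPORTED_COMBINED = [0.27, 0.22, 0.19, 0.16, 0.16]

# Saaty's random consistency index RI for matrix sizes n = 1..10.
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]

# HYPOTHETICAL pairwise judgments on Saaty's 1-9 scale (not the survey data).
# EXAMPLE[i][j] states how much more important perspective i is than j;
# the matrix is reciprocal: EXAMPLE[j][i] == 1 / EXAMPLE[i][j].
EXAMPLE = [
    [1, 2, 2, 3, 3],
    [1 / 2, 1, 1, 2, 2],
    [1 / 2, 1, 1, 1, 2],
    [1 / 3, 1 / 2, 1, 1, 1],
    [1 / 3, 1 / 2, 1 / 2, 1, 1],
]


def priority_vector(m: Matrix) -> list[float]:
    """Estimate AHP weights as normalized row geometric means."""
    n = len(m)
    gm = [math.prod(row) ** (1 / n) for row in m]
    total = sum(gm)
    return [g / total for g in gm]


def consistency_ratio(m: Matrix, w: Sequence[float]) -> float:
    """CR = CI / RI, where CI = (lambda_max - n) / (n - 1)."""
    n = len(m)
    if n <= 2:
        return 0.0  # 1x1 and 2x2 reciprocal matrices are always consistent
    aw = [sum(m[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return ci / RANDOM_INDEX[n - 1]


def combine_groups(group_weights: Sequence[Sequence[float]]) -> list[float]:
    """ASSUMED aggregation: arithmetic mean of the groups' weight vectors."""
    k = len(group_weights)
    return [sum(col) / k for col in zip(*group_weights)]


if __name__ == "__main__":
    w = priority_vector(EXAMPLE)
    for name, weight in zip(PERSPECTIVES, w):
        print(f"{name:32s} {weight:.3f}")
    # A CR below 0.10 is the conventional threshold for acceptable consistency.
    print(f"CR = {consistency_ratio(EXAMPLE, w):.3f}")
    print("reported combined weights sum to", round(sum(REPORTED_COMBINED), 2))
```

With several stakeholder groups, each group's matrix would be passed through `priority_vector` and the resulting vectors through `combine_groups`; aggregating individual judgments by geometric mean before computing weights is an equally common alternative.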

This study derived weights for the perspectives used to evaluate DataON from experts in the stakeholder group surrounding the platform, collected additional evaluation perspectives, and examined evaluation criteria. The results can therefore serve as baseline data for developing an evaluation framework for the DataON platform.

References

Adams Becker, S., Cummins, M., Davis, A., Freeman, A., Giesinger Hall, C., Ananthanarayanan, V., … Wolfson, N. (2017). NMC Horizon report: 2017 library edition. Retrieved November 3, 2019, from https://www.issuelab.org/resources/27498/27498.pdf

Barsky, E., Brown, H., Ellis, U., Ishida, M., Janke, R., Menzies, E., … Vis-Dunbar, M. (2017). UBC Research data management survey: Health sciences: Report. Retrieved from University of British Columbia website: https://open.library.ubc.ca/cIRcle/collections/ubclibraryandarchives/494/items/1.0348070

Diepenbroek, M., Glöckner, F. O., Grobe, P., Güntsch, A., Huber, R., König-Ries, B., … Triebel, D. (2014, September 22-26). Towards an integrated biodiversity and ecological research data management and archiving platform: The German federation for the curation of biological data (GFBio). In E. Plödereder, L. Grunske, E. Schneider, & D. Ull (Eds.), Informatik 2014 (pp. 1711-1721). Gesellschaft für Informatik e.V.

Ministry of Science and ICT. (2019). Announcement of 2020 ministry of transitional and monetary affairs budget and government R&D budget. Retrieved November 9, 2019, from http://www.korea.kr/common/download.do?fileId=187894400&tblKey=GMN

Saracevic, T. (2004, October 4-5). Evaluation of digital libraries: An overview. In M. Agosti & N. Fuhr (Eds.), DELOS WP7 Workshop on the Evaluation of Digital Libraries (pp. 13-30). Springer.

Singh, N. K., Monu, H., & Dhingra, N. (2018, February 21-23). Research data management policy and institutional framework. In 2018 5th International Symposium on Emerging Trends and Technologies in Libraries and Information Services (ETTLIS) (pp. 111-115). IEEE.

Tsakonas, G., Kapidakis, S., & Papatheodorou, C. (2004, October 4-5). Evaluation of user interaction in digital libraries. In DELOS WP7 Workshop on the Evaluation of Digital Libraries (pp. 45-60). Elsevier.

Xie, I., & Matusiak, K. K. (2016). Evaluation of digital libraries. In I. Xie & K. K. Matusiak (Eds.), Discover digital libraries (pp. 281-318). Amsterdam: Elsevier.

- Submission Date

- 2020-01-19

- Accepted Date

- 2020-04-09