JOURNAL OF INFORMATION SCIENCE THEORY AND PRACTICE

- P-ISSN 2287-9099

- E-ISSN 2287-4577

- SCOPUS, KCI

Cognitive Biases and Their Effects on Information Behaviour of Graduate Students in Their Research Projects

Hamid R. Jamali (School of Information Studies, Charles Sturt University)

Abstract

Cognitive biases can influence human information behaviour and the decisions made during information seeking and use. This study aims to identify the biases involved in some aspects of information behaviour and the role they play in information behaviour and use. Twenty-five semi-structured face-to-face interviews were conducted in an exploratory qualitative study with graduate (MA and PhD) students who were at the dissertation/thesis research stage. Eisenberg and Berkowitz's Big6™ Skills for Information Literacy model was adopted as a framework for the interviews, and the analysis was done using the grounded theory coding method. The findings revealed the presence of twenty-eight biases in different stages of information behaviour, including availability bias (affects the preference for information seeking strategies), attentional bias (leads to biased attention to some information), anchoring effect (leads users to anchor on particular parts of information), confirmation bias (increases the tendency to use information that supports one’s beliefs), and choice-supportive bias (results in confidence in information seeking processes). All stages of information seeking were influenced by some biases. Biases might result in a lack of clarity in defining information needs, failure to look for the right information, and misinterpretation of information, and might also influence the way information is presented.

Keywords: cognitive biases, information behaviour, information use, research, students

1. INTRODUCTION

Information behaviour involves processing information and making decisions, which are cognitive processes, and it is therefore worth studying from a cognitive perspective (Fidel, 2012, p. 129). Cognitive biases are one of the cognitive phenomena that might be involved in information behaviour. We already know that people may not follow rational or normative models of decision making and that biases can have a negative impact on decision outcomes (Kahneman, Slovic, & Tversky, 1982).

The term ‘cognitive bias’ was introduced in the 1970s to “describe people’s systematic but purportedly flawed patterns of responses to judgment and decision problems” (Wilke & Mata, 2012, p. 531). Cognitive biases occur because human cognition has a limited ability to properly attend to and process all the information that is available (Kruglanski & Ajzen, 1983). Researchers have found that humans rely on simplifying strategies, or rules of thumb (called heuristics), for decision making. As Bazerman and Moore (2012, p. 6) stated, heuristics are standard rules that implicitly direct our judgment. They are mechanisms for coping with the complex environment surrounding our decisions. Although they are generally helpful, using them sometimes results in serious errors. In a decision-making context, bias does not mean being prejudiced or unwilling to keep an open mind; rather, it refers to “an unconscious inclination toward a particular outcome or belief that can affect how humans search for and process information” (Schmutte & Duncan, 2014, p. 69). Cognitive biases are “similar to optical illusions in that the error remains compelling even when one is fully aware of its nature. Awareness of the bias, by itself, does not produce a more accurate perception. Cognitive biases, therefore, are exceedingly difficult to overcome” (Heuer, 2007, p. 112). Researchers have so far identified a large number of cognitive biases; the list compiled by Benson (2016) includes about 180 biases, categorised into four main groups: too much information, not enough meaning, the need to act fast, and what should we remember. These are four main problems that shape the way our brain processes information. For instance, ‘too much information’ and ‘what should we remember’ refer to the tricks our brain uses to pick out the bits of information that are likely to be useful now or in the future.

Knowing which cognitive biases are involved and how they might play a part in seeking, retrieving, gathering, interpreting, and using information can shed light on some of the lesser-known dimensions of information behaviour. While cognitive biases have received some research attention in areas such as health and finance, we do not know enough about them in the general context of information behaviour. Without an understanding of the potential impact of cognitive biases on information behaviour, improving information services, access, and use can be challenging. This study aims to contribute to our understanding of the role of cognitive biases in information behaviour. As one of the few qualitative studies that explore cognitive biases at different stages of information seeking, it adds to the thin library and information science literature on the topic. To this end, we investigate cognitive biases in a specific user group (graduate students) and a specific context (their research projects), so that the findings can be better contextualised. More specifically, the research seeks to answer two questions:

2. LITERATURE REVIEW

The cognitive approach is a significant approach to human information behaviour, and some of its aspects have been covered in works such as Belkin (1990), Ellis (1989), Kuhlthau (1993), and Ingwersen (1992, 2001). For instance, the relationship between different cognitive styles (learning styles) and search behaviour was studied by Ford, Wood, and Walsh (1994). Cognitive styles (along with demographic factors) are considered in the category of individual differences in studies of information behaviour (Ingwersen & Järvelin, 2005).

One aspect of the cognitive viewpoint is the issue of uncertainty in information behaviour. Humans rely on a limited number of heuristic principles to make decisions under uncertainty, and these heuristics can give rise to biases (Tversky & Kahneman, 1974). Some studies, such as Ingwersen (1996), have dealt with the role of uncertainty in information behaviour, and Kuhlthau (1993) made uncertainty a central principle of her information behaviour model. Wilson, Ford, Ellis, Foster, and Spink (2002) showed that the concept of uncertainty can be operationalised at different stages of the information seeking process so that users can express the degree of uncertainty they experience.

Despite studies such as those above on certain cognitive aspects of information behaviour, cognitive biases have not received much research attention in information science, and more specifically in information behaviour. However, there have been several studies on biases in other fields, including accounting and auditing (e.g., Griffith, Hammersley, & Kadous, 2013), business and behavioural finance (e.g., Kariofyllas, Philippas, & Siriopoulos, 2017), information systems (e.g., Arnott, 2006), management (e.g., Pick & Merchant, 2010), health (e.g., Hussain & Oestreicher, 2018), and psychology (e.g., Miloff, Savva, & Carlbring, 2015).

There have been two reviews of bias studies in information systems. One review (Fleischmann, Amirpur, Benlian, & Hess, 2014) covered 84 studies (1992-2012) and concluded that the research in this area was sparse and disconnected and that there was considerable potential for further research. The review showed that most of the studies were in the area of information system usage (70), information system management (27), and software development (11). Overall, 120 biases were examined in the studies, with framing and anchoring being the most commonly examined biases. The review also revealed that researchers used a range of methods for their studies including experiments, surveys, case studies, and interviews. Another review with a focus on software engineering (Mohanani, Salman, Turhan, Rodriguez, & Ralph, 2018) covered 65 papers (1990-2016) that investigated 37 biases with anchoring, confirmation, overconfidence, and availability being the most examined ones.

In health information, a retrospective analysis of the search and decision behaviours of 75 clinicians showed that reading the same documents did not lead clinicians to the same answer. The researchers hypothesised that clinicians experience anchoring, order, exposure, and reinforcement effects while searching and that these biases might influence their decisions (Lau & Coiera, 2007). A study of undergraduate students showed that applying debiasing strategies to anchoring and order biases influenced their ability to answer health-related questions accurately, as well as the strategies they used to conduct searches and retrieve information (Lau & Coiera, 2009). Interviews with lay individuals revealed that incorrect or imprecise domain knowledge led people to look for health information on irrelevant sites, often seeking out data to confirm their incorrect initial hypotheses, a pattern attributed to selective perception and confirmation biases (Keselman, Browne, & Kaufman, 2008). Another study (Schweiger, Oeberst, & Cress, 2014) also confirmed the presence of confirmation bias in health-related information searching and showed that presenting users with tag clouds containing popular tags that challenged their bias had the potential to counter biased information processing.

The context and tools used for searching for information might play a role in biases. Past studies have shown that web searchers are subject to bias from search engines; for instance, search engines strongly favoured a particular, usually positive, perspective irrespective of the truth (White, 2013). Search context can also make users more susceptible to confirmation bias (Kayhan, 2015). An experiment showed that when disconfirming evidence was described using different words or phrases, the search engine generated a result set consisting mostly of confirming evidence, which in turn led users to download only confirming evidence and thus to make biased decisions (Kayhan, 2015). However, manipulating tools, such as presenting comprehensible information in Google’s knowledge graph box, could help counter biased information processing (Ludolph, Allam, & Schulz, 2016).

Past studies have also proposed and tested a range of debiasing strategies, such as modifying the user interface of search systems (Lau & Coiera, 2009), computer-mediated counter-argument (Huang, Hsu, & Ku, 2012), using tag clouds with tags that challenge biases (Schweiger et al., 2014), and adding information to Google’s knowledge graph box (Ludolph et al., 2016). Most of the debiasing strategies applied in information searching studies are either cognitive (e.g., “consider the opposite”) or technological (e.g., provision of external tools to improve the decision environment) (Ludolph et al., 2016), and positive results have been reported for most of them. However, reviews of past studies on biases (Fleischmann et al., 2014; Mohanani et al., 2018) indicate that there is still a great need for research on mitigation techniques.

As the above review indicates, cognitive biases have been examined to a certain extent in health information searching. However, their roles in information behaviour in other contexts and among different user groups are largely unknown. This study helps bridge this gap by focusing on some aspects of information behaviour among graduate students.

3. METHOD

A qualitative approach was used due to the exploratory nature of the study. The lack of research on biases in information behaviour makes it difficult to form hypotheses. Therefore, instead of experimental methods, a qualitative method was used so that a range of biases and various stages of information behaviour could be explored. Moreover, qualitative methods and interviews have been used in the past to study the existence and effects of biases in other areas, such as software engineering (Mohanani et al., 2018) and information systems (Fleischmann et al., 2014). Participants were 25 graduate students of Kharazmi University (Iran) who were chosen using purposive sampling. The students had to be at the dissertation stage, that is, they had finished their taught subjects and were doing research for their dissertation or writing it up. Recruitment notes were distributed through bulletin boards on campus, and participants were selected from among those who expressed interest so as to increase diversity in terms of gender, discipline, and research stage. Participants consisted of 14 female and 11 male students from a range of disciplines including library and information science, mathematics, literature, geography, business administration, international relations, law, accounting, economics, management, and geology. Twenty of the participants were PhD students and five were Master’s students. They were between 25 and 38 years old, with an average age of 32.

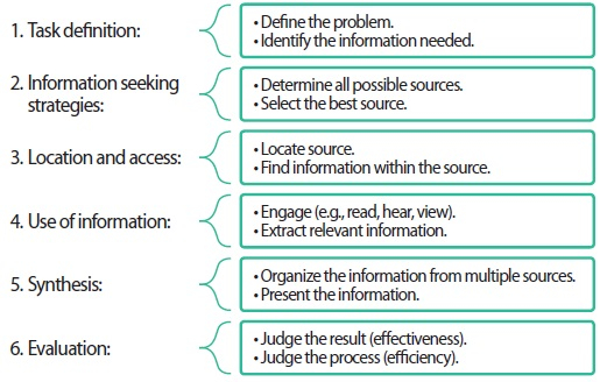

Semi-structured interviews were used for data collection. To guide the interviews, the Big6™ Skills for Information Literacy model (Lowe & Eisenberg, 2005) was adopted as a framework. This model was developed by Eisenberg and Berkowitz (1990). It describes a process of six stages, from task definition through information seeking strategies, location and access, use of information, and synthesis to evaluation. Although this model is mostly dedicated to information problem solving rather than specifically to information seeking processes or behaviour, it was adopted because its stages roughly cover the stages of the research process that students might go through when doing a dissertation/thesis. The stages are neither too broad nor too narrow. Also, as Lowe and Eisenberg stated, it is a flexible process that includes the necessary elements for solving problems and completing tasks, and it has potential for the study of human information behaviour. Moreover, it is not always a linear process and can be applied to any information situation, whether an academic or everyday information problem or need (2005, p. 66). The six stages of information problem solving are presented in Fig. 1 (Lowe & Eisenberg, 2005, p. 65).

An interview protocol was developed based on the stages of the Big6 model. A few pilot interviews were conducted to test the protocol, and as a result some modifications were made to it, including to its structure. Before the interviews, participants were asked to think of real actions they had taken for their research and to answer the questions based on their lived experiences. Interviews consisted of a series of questions (between 16 and 21 in total) grouped according to the six stages of the Big6 model, plus a few general questions at the end about decision making during their research. Questions covered interaction with information in the context of students’ projects, successful and unsuccessful search and information access experiences, students’ actions, and decision making throughout the six stages of the model. For instance, for the location and access stage, we asked them how they located and gained access to the information resources they had identified and how they made decisions in that regard. Similarly, for the use of information stage, we asked them what they did with the information they found, what types of information they considered using, and whether they ignored or avoided any information and why. Interviews were held on campus at a time and location convenient to the participants. They were recorded and transcribed for analysis. Participants received an information sheet and signed a consent form. Interviews took about 50 minutes on average. Data collection continued until the researchers judged that saturation had been reached. Fictional first names are used in the interview quotations in the Results section, chosen so that participants’ gender is clear to readers.

The grounded theory coding method was used for data analysis, following Glaser’s approach (Glaser & Strauss, 1967), with two main stages of coding: substantive coding and theoretical coding. After the interviews were transcribed, each was broken down into smaller units of analysis, and coding was done to reach the main concepts. The data analysis process was nonlinear and involved other sub-steps, such as comparing data along as many dimensions as possible. In line with the aims of the study, the researchers’ main focus during coding was on identifying the biases and their roles in the students’ information behaviour. Biases were identified by matching examples of students’ behaviour against the definitions of the biases.¹ Bias identification drew on the interviews as a whole, including all the descriptions presented by participants and all conceptualisations of the data, although only selected quotations from different interviews are reported in this article as evidence. As an example of the data analysis process, an excerpt from one of the interviews is presented below:

... once I’ve found the information I’m looking for, I start reading and taking notes. For using the information I check if the information is useful for my work. If there is some piece of information in the work that is important for my thesis and somehow supports the idea, model or hypothesis in my thesis I would highlight it and it catches my interest and I make sure I make note of it…

For the excerpt above, codes such as ‘a decision about the use of information,’ ‘a tendency to favor supporting information,’ and ‘the effect of the user’s mentality on selecting information’ were initially assigned. In the next stage, it was determined that the incident related to the fourth stage of Big6 (use of information), and this was assigned as a code. The codes were also refined to ‘inclination towards information that is aligned with own views.’ In the final stage, comparing the codes with the definitions of the biases resulted in the data being coded as an incident of confirmation bias during stage four of information seeking.

To ensure the credibility of the findings, several actions were taken. First, the data analysis was conducted twice, two months apart, and the two sets of results were largely in accord, showing that the analysis was consistent. Member checking and external audit were also used. For member checking, the analysis of the interview transcripts was sent to the participants one to two weeks after the interviews, and they were asked to check whether the interpretations of their interviews were sound. For the external audit, a second researcher was asked to go through the data and the outcome of the analysis to check whether the findings and interpretations were supported by the evidence in the data.

¹ Many sources on cognitive biases, such as Dobelli (2013), present an example of a situation or behaviour for each bias, which helps in determining whether a bias is present.

4. RESULTS

The interviews revealed the presence of 28 biases in the information behaviour of the participants. Table 1 lists these biases and their effects on information behaviour; definitions of the biases are given in the Appendix. The number of participants (third column) shows how many participants experienced each bias. Frequency shows the total frequency of the bias across all interviews, as a bias might have been present in more than one stage of a participant’s information behaviour. The stages column shows the stages of information behaviour (based on the Big6 stages) in which each bias occurred. The final column shows the effects that a bias could have on information behaviour. Based on the categorisation proposed by Benson (2016), these biases can be grouped into four categories according to the problems they help us address:

- Information overload (biases No. 1–12);
- Lack of meaning (biases No. 13–19);
- The need to act fast (biases No. 20–27); and
- How to know what needs to be remembered for later (biases No. 28, 5, and 14).

To better understand the data presented in Table 1 and the effects of biases on information behaviour, each stage of Lowe and Eisenberg’s (2005) model is discussed below. It should be noted that the biases discussed under each stage were not restricted to that stage. The relationships between biases and stages of information seeking are based solely on the evidence of their presence in each stage identified during the analysis (see the coding example above).

4.1. Stage 1: Task Definition

When participants were choosing the subject of their research and defining the topic of their information needs, ambiguity aversion might lead them to avoid topics without a rich past literature or research locations with which they were not familiar. For instance, Mary said “I needed to choose a location for my research and I decided to choose this city because I was familiar with that city and I lived there for a few years so I knew its various quarters and areas. If it was another city about which I knew little, what would I do?”

Besides avoiding challenging situations, participants may favour things that bring peace of mind (selective perception). Philip said “I wanted my research topic to be something about which some information already existed. My supervisor suggested a few topics and I checked them and picked the one that looked more familiar to me based on what I already knew and could use. This was because I already knew a few things about the topic and had an overall knowledge of it.”

If participants are overconfident and not fully aware of the limits of their knowledge and skills, they may end up choosing a topic that they do not understand properly. Participants referred to such situations in several interviews; for instance, “the topic was very interesting and professional and I spent a lot of time on it. Although I knew it was a difficult one I started it and after a few months, I realised it was beyond my knowledge and skills” (Mary Anne).

Recency bias seems to be a natural habit in our information behaviour, as we tend to keep recent events in mind. As a result, books we have recently read or a seminar we have recently attended are the information sources that come to mind when we are choosing topics. Information resources might also be preferred for various reasons, including their popularity in a community. The bandwagon effect means that participants might favour information that is widely favoured by other people. For instance, Philip stated that “of course the topic I am working on is of interest to many people, i.e. many researchers in our field are interested in this topic. In the last few years, many people have done some work on the topic and in a way it is a hot topic in our field and that’s why I chose it.” The interviews showed that the curse of knowledge (Birch, Brosseau-Liard, Haddock, & Ghrear, 2017) also played a role at this stage of information behaviour. Based on their prior knowledge and perceptions, participants tended to ignore some aspects of the problem and limited themselves to what they already knew.

Another bias at this stage was conservation bias, which means that people tend to keep their current perceptions and do not react to new information. When choosing research topics, participants might avoid what seemed radical and act on their preconceptions. Anchoring bias was also identified at this stage: participants could not let go of what they had considered at the beginning and move on. In other words, they anchored on a specific topic that had formed in their mind for some reason, although it might not be a suitable research topic. Kasey stated that “one thing that I could not get rid of was that I frequently went back to the same topic. I had a specific thing on my mind, which my supervisor suggested and I thought this is the best and I got stuck on it but I could not reach any conclusion on that matter. I wasted a lot of time on that.”

4.2. Stage 2: Information Seeking Strategies

Due to ambiguity aversion, participants were not inclined to use information sources that were new to them and with which they had no prior experience. For example, participants stuck to their own keywords and avoided new ones. Participants might also be overconfident in their methods and strategies for seeking information. Due to the bandwagon effect, participants favoured information sources that were prevalent and common or were recommended by their peers. Jessica stated, “it has happened for me and my friends that we use a set of keywords for searching and we frequently see the same results. The keywords are right and I found them based on the information I have and based on my knowledge, however, I learned that there are other things and I need to expand my mind.”

Due to attentional bias, participants paid more attention to certain aspects of information (e.g., the author’s reputation or affiliation), which affected their choices. When deciding on their search strategies, participants relied on their past experiences to choose the best strategies, regarding strategies that had succeeded in the past as more likely to lead to success (availability bias) (Pompian, 2006). An example is: “the most important thing and the first thing I do is to ask one or two of my friends that I know are knowledgeable and experienced to tell me where I can find such information and I frequently found this helpful” (David).

Due to status quo bias, participants tended to stick to their search strategies and were reluctant to change their habits. Those who experienced this bias used the same websites, information sources, resources, and libraries that they had used in the past: “I always go to a website that I have been using since 2011 when I was a student because I know I can find what I need there” (Ruth).

Participants’ strong preference for online resources, based on the belief that the era of print resources is over, indicates belief bias. Another example of belief bias is the prevalent belief among participants that English resources are superior to all resources in the Persian language. Besides reliance on beliefs, stereotyping (Hinton, 2000) also influenced participants’ behaviour. The superiority of English resources can also be considered a stereotype among Iranian students. For instance, “I don’t read Persian articles and it happens rarely that I consult Persian resources. I only use Persian resources occasionally when I need to say what has been done nationally. However, mostly my preference is English for various reasons, including that foreign authors are more knowledgeable” (Kim).

Information bias refers to the tendency to seek more information than is needed. In today’s world, with its overabundance of information, some participants drowned in information by continuing to search extensively. Ruth said:

“I try to look at all resources as much as possible. My personal desire is to go all the way and find every piece of information that exists on the topic. I constantly think there might be more and I should carry on and I look for more and more information. Maybe the next article gives me a different perspective and that’s why I like looking for information. It is self-evident that more information is always better.”

Another bias identified was pro-innovation bias (Rogers, 2010), which leads participants to enthusiastically embrace new technologies and techniques for searching and finding resources. Some participants were pro-innovation and tended to use new technologies to obtain information, regarding them as having many advantages and few deficiencies. Reactance plays a part when participants are prevented from accessing certain information resources. As past research (Jamali & Shahbaztabar, 2017) has shown, when people are discouraged or banned from accessing some information, they become keener to access it and try harder (reaction to censorship). Mary very firmly stated that “when a person or a group decides that you should not have or read certain information, one becomes more enthusiastic about obtaining that information. No one has the right to decide what I should or should not know.”

Another bias at this stage is the serial position effect, which results in paying more attention to resources that appear higher in the list of retrieved results. Barbara said that “when searching, I think I pay more attention to the first results, not only in Google but in any database, the top results receive more attention and you download those items.”

4.3. Stage 3: Location and Access

As in the two previous stages, the bandwagon effect influenced participants at this stage, for instance in their preference for online information resources that were recommended by and popular amongst their peers. Jason stated that “I first search databases that are well known in our field, those that have been around for many years and everybody in our field uses those. One of our lecturers gave us a list of prestigious journals that was pretty exhaustive and we search in those journals and check their websites.”

At this stage, participants turned to the locations for finding and accessing information that, based on their past experiences, they considered most likely to provide access. This availability bias was evident in several interviews. For instance, “I need to check the websites and look for resources, for instance, I need to use Google Scholar or ScienceDirect. I first use Google Scholar because I’ve used it before and I know that I can find the articles I need so I use it again to find new articles” (Daniel). Due to status quo bias, they also tended to choose information centres or sources with which they were familiar and to avoid any change. Kasey said, “I think once I have found the resources I wanted I’d go to the special library of our discipline or the special website to get them. I have used them before and I know how to get the results fast. This is better than trying to find a new way.”

Participants made choices that were aligned with their expectations (selective perception). Peter said “I never go to our university’s library. It is very dull, its staff are impatient. Everything is old and worn out. I like to go to large libraries where you feel comfortable, unlike our university library to which I wouldn’t go even if I know it has the information I need.”

4.4. Stage 4: Use of Information

When encountering and using information, participants may favour some pieces of information over others. Several biases may play a role in such situations. Participants might favour information that is aligned with their perceptions and beliefs (confirmation bias). Ethan mentioned that “in the content, specific things might catch my attention. For instance, I am reading something and I see that something, documented or not, is what I was looking for and it confirms my idea and it is something that I agree with, therefore I make sure I use it in my work.” Participants may also prefer information that is simple, unambiguous, and easy to understand (illusion of truth effect) (Hirshleifer, 2001). Victor stated that “I disregarded the resources that had complex concepts. But as I said I knew that they were relevant due to the references to them. Overall if the resource has concepts that I find difficult to understand and I can’t figure it out after a few times of reading or if they require a lot of time, I will disregard them.” Visual or graphical information is also often preferred (picture superiority effect).

Participants may also encounter information that presents their research topic in a negative light, such as distressing statistics or reports of people’s dissatisfaction. Participants stated that they paid more attention to such negative information than to positive information and preferred to extract it (negativity bias). Julia said: “When I think, I can see that the first thing in a text that gets my attention is the negative news. The negatives are seen more than the positives, negative statistics and news are more unfortunate and sorrowful and should receive more attention.”

Besides the above biases, participants emphasise specific features of resources and choose resources on the basis of those features, which could be due to selective perception or anchoring. An example of selective perception is when participants prefer resources in Persian because they want to avoid the challenge of reading English resources, as they feel less confident in English. For anchoring, Nathan stated that “for this work, I first read a few articles and I understood the overall topic based on those articles. Because I didn’t know much beforehand I moved forward based on the theories I learned from those articles and I put the basis of my work on those theories and I used those as keywords for searching.” Moreover, participants anchor on the amount of work their university requires for a dissertation or thesis (e.g., number of pages), and that quantity drives their work. Also, due to conservation bias, participants disregard new information and respond more favourably to information that is aligned with their prior perceptions. Joanne said, “I have seen multiple times in the research seminar class that when discussing a topic, some other participants put too much emphasis on one particular resource or work and don’t want to listen to someone else or change their opinion, no matter how many arguments you put forward.”

4.5. Stage 5: Synthesis

When presenting information, participants were influenced by biases such as confirmation bias, the illusion of truth effect, the picture superiority effect, and the bandwagon effect. As a result of confirmation bias, participants tend to present information that confirms their perceptions. Ethan said, “in my opinion, content that I have extracted in the previous stages that are things that are aligned with my opinion, and sound interesting to me and confirm my ideas, I present those in my chapters.” Under the illusion of truth effect, participants prefer to synthesise, organise, and present information in a simple way. Participants also prefer to use pictures (picture superiority): “when I am reading, pictures help me more than text so when I am writing I make more use of pictures. If there is no picture, readers have to read and visualise in their mind, which might not be a simple thing to do and might result in mistakes. So I prefer to use pictures when I put myself in readers’ shoes” (Diana). Under the bandwagon effect (VandenBos, 2007), participants tend to present information that interests many people: “we need to talk about things in our work that are hot topics of the day and are the buzzwords” (Noah).

The framing effect influenced both the format and the structure of the content that participants presented. Participants might present information in particular formats and layouts, or highlight or play down parts of the content. For example, Daniel said, “sometimes one could see that some information resources present information in certain ways to achieve the desired outcome. They tell you the story the way they want to. I think this is natural and I do it too. I might emphasise part of the content and play down some other parts that might undermine the conclusion of my work. You could present someone’s life story in different ways that result in forming a different opinion in the audience.”

Recency bias and the serial position effect may explain situations in which participants rely on recently obtained information when presenting information; at this stage, the two biases overlap to some extent. Belief bias means that participants readily accept an argument if they find it easy to believe, without necessarily examining the evidence. For instance, if they believe that the information they present is useful, they put a lot of effort into presenting it.

While participants believed that in many cases the information they presented was clear, their audience thought otherwise and asked for clarification. This suggests the illusion of transparency (Brown & Stopa, 2007): students think they are very clear about what they present, but the reactions and feedback they receive indicate that they have not succeeded in conveying their message. As one student noted, “It has happened several times when I gave a seminar or in my confirmation presentation, that other students ask questions that I think I have already clearly answered in my text or presentation. But it seems it hasn’t been sufficient, therefore, I am always afraid that in my viva I face criticism that this doesn’t mean what you mean to say…” (Kasey).

4.6. Stage 6: Evaluation

If a person has an unrealistically positive evaluation of the information-seeking process and regards all of the information found as relevant to his or her information needs, the overconfidence effect might be involved. Diana stated that “I wasn’t after new search strategies and I didn’t want to learn about those, I was pretty sure that the right search strategies were what I already knew and I already used. I wasn’t concerned whether my approach or strategy was correct or incorrect.” Sometimes, because of choice-supportive bias (Lind, Visentini, Mäntylä, & Del Missier, 2017), participants affirm the validity of the process and its outcome, supporting their own decisions and evaluating their actions and behaviour positively. An example is: “I think overall the process that I went through was good. I mean I think I couldn’t do more. My method is fine, and things I’ve done, places I searched, and the ways I used to access the information are all correct and I reach desired outcomes and this has been the case so far” (Leah).

According to self-serving bias, people often attribute problems with the information-seeking process (and their own failures) to external factors (e.g., the education system, time), while attributing their successes to their own merits and other internal factors. This theme was quite common in participants’ comments in the interviews. Due to the false consensus effect, participants assume that all other students agree with them about how to look for information, and they regard their own beliefs as self-evident. For instance, participants frequently commented that everyone agrees that online resources are superior to print ones, or that ‘Googling’ is the best and first choice when looking for information. During the information-seeking process, and at the evaluation stage in particular, participants realise that they have not progressed according to plan and that the process has taken longer than they expected. This can be attributed to the planning fallacy (Ehrlinger, Readinger, & Kim, 2016), as many of the issues and challenges are not considered when such plans are made. Chloe experienced this planning issue and stated that “unfortunately I am in a term when I should have finished half of my thesis based on a standard four-year doctoral period. When I started I thought I would finish in four years and had a lot of plans and always tried to stick to my plans. But now I am behind in most of my plans. Of course, I must say there are factors that are not under my control such as our supervisors and the university’s situation.” When participants were asked whether they were satisfied with their information seeking and about their strengths and weaknesses, the effect of biases was visible: most answered that they had done the right things, attributing success to their own strengths and problems to external factors. They also believed that their peers behaved in the same way and that they all agreed on this.

5. DISCUSSION AND CONCLUSIONS

This study showed the prevalence and diversity of biases in some aspects of the information behaviour of graduate students. The findings show how biases, as psychological and cognitive factors, might influence our information behaviour. Biases can play a role in all aspects of information behaviour: defining the details of information needs, deciding on and selecting information sources, extracting and using information, organising and sharing the information obtained, and evaluating the process of looking for information.

Consistent with the findings of this research, several past studies, including Lau and Coiera (2007), Keselman et al. (2008), and Blakesley (2016), have highlighted the influence of biases on information behaviour. Although some past studies focused on specific biases and some investigated the issue only in the context of searching in the digital environment, biases such as anchoring, confirmation, and status quo biases, which were prevalent among participants in this study, were also identified in previous studies. Several biases, such as availability bias (stages 2 & 3), conservation bias (stages 1, 2, & 4), selective perception (stages 1, 3, & 4), recency bias (stages 1, 4, & 5), the bandwagon effect (stages 1, 2, 3, & 5), the overconfidence effect (stages 1, 2, & 6), and status quo bias (stages 2, 3, & 5), were present in multiple stages of information behaviour. Given the continuous and non-linear nature of the processes of looking for information, it is to be expected that biases play a role in different stages of information behaviour. Some biases (e.g., confirmation bias) that affect participants when finding information also affect them when presenting information.

The findings of this study show that the roles biases play in information behaviour have consequences. Biases might make users favour some choices and be biased against others. In the stage of defining information needs, participants might pay more attention to some issues and ignore or overlook others because they are under the influence of biases such as selective perception, the bandwagon effect, and ambiguity aversion. Biases might also make participants favour or avoid some options when selecting information resources (physical or digital) and choosing information centres (physical or virtual). Biases can also result in search failure: for instance, users may stick to the keywords they already know, which may not be the best keywords, or focus on the one dimension of the topic they are familiar with and ignore its other dimensions. Another example of failure is when availability bias encourages participants to favour information sources and resources that they deem more suitable to meet their needs based on their past experiences.

Attentional bias (Baron, 2008), belief bias, the framing effect, and stereotyping are among the biases that may lead to failure in finding relevant information, because participants focus their attention on certain aspects of information and act on that basis. For instance, a user might use authors’ authority or journal impact factors as proxies for quality rather than considering the quality of the content, or a Persian-speaking student might favour English sources on the assumption that their content is of higher quality.

The findings showed that the consequences of some biases might become evident in extracting, using, synthesising, and sharing information. Likewise, Lau and Coiera (2007) showed that different users gave different answers to the same questions in similar experimental scenarios even though they all received the same information, and concluded that the users experienced anchoring, exposure, and order biases while searching for information. Similarly, participants in the current study seemed to have experienced anchoring, negativity (Ito, Larsen, Smith, & Cacioppo, 1998), confirmation, conservation, illusion of truth, picture superiority (Ma, 2016), and framing biases when extracting and presenting information. The study also found that some biases can have negative consequences in the stages of synthesising (organising and presenting) information. Status quo bias and the framing effect might make users present information in a way that they are used to and that seems desirable to them, which may not be the best or correct way of presenting it. The observer-expectancy effect can likewise bias the presentation of information. The study by Lomangino (2016) showed the role of confirmation bias in reporting research findings.

Finally, a consequence of biases in evaluating the effectiveness and efficiency of the information-seeking process is that users might blame external factors for their failures due to the overconfidence effect and similar biases. As a result, users may not seek to improve their skills, because they do not attribute their lack of success to their own knowledge and skills. This might also be related to users’ attribution style, as those who attribute their success or failure to external factors (compared with those who attribute them to internal factors) experience lower satisfaction and do not aspire to improve their capabilities (Behzadi & Sanatjoo, 2019).

We must note that the presence of biases does not mean that users are always under their influence. The role biases play, and the consequences of their involvement in our behaviour, vary with contextual factors, and the results of their influence are not always the same either. Biases and the use of mental shortcuts do not always yield negative outcomes; sometimes they serve as simple ways to achieve the desired outcome. We also need to bear in mind that biases are only one of many factors that play a role in information behaviour. Biases might also be related to some known concepts of information behaviour, such as information avoidance (which might be related to confirmation bias, selective perception, and conservation bias); this has been discussed by Behimehr and Jamali (2020) and requires further investigation.

While this study did not examine debiasing techniques, the findings have some implications for debiasing. Designing and implementing debiasing techniques, which can entail changes to user interfaces (Lau & Coiera, 2009), requires knowing which cognitive biases users might experience at each stage of their information seeking. Studies such as this one are therefore needed to inform system designers if debiasing techniques are to be implemented in information systems.

This study has some limitations, as it focused on a small group of students in the context of a specific task (theses/dissertations). Another issue is that identifying biases, which are latent phenomena, is not straightforward; one needs to be cautious in attributing biases to particular behaviours of individuals, and this might require benchmarking against objective baselines (Kahneman & Tversky, 1979). Although we have used evidence from the interviews as an indication of the possible presence of biases, it is far from perfect, and the evidence in this study is suggestive rather than in any sense conclusive. Moreover, the study was exploratory, and the aim was to explore whether and how biases might play a role in information behaviour. Fleischmann et al. (2014, p. 10) argued that although biases are latent phenomena, qualitative and argumentative methods such as interviews are not inappropriate for studying them. We hope that the results of this study will stimulate much-needed further studies on the subject.

Future studies should examine biases in different contexts (e.g., the digital environment) and for different user groups. Some of the participants in this study were library and information science students, which could be counted as a limitation of the study. Their research methods coursework is similar in nature to that of other social science disciplines, but they are more exposed to information literacy education. The impact of information literacy education on cognitive biases could be the subject of a future study. The role of culture in the biases of users from different countries could also be considered, as could possible relationships between cognitive biases and cognitive styles. There is also a need for more studies on methods and strategies for mitigating the negative impact of biases. In the context of information behaviour and information literacy, increasing awareness of cognitive biases, teaching critical thinking skills, and designing information systems that expose users to information countering their biases might help.

References

Benson, B. (2016). Cognitive bias cheat sheet. Better Humans. Retrieved November 22, 2017, from https://betterhumans.coach.me/cognitive-bias-cheat-sheet-55a472476b18

Ehrlinger, J., Readinger, W. O., & Kim, B. (2016). Decision-making and cognitive biases. Retrieved December 17, 2017, from https://www.researchgate.net/publication/301662722_Decision_Making_and_Cognitive_Biases

Fleischmann, M., Amirpur, M., Benlian, A., & Hess, T. (2014, June 9-11). Cognitive biases in information systems research: A scientometric analysis. Paper presented at the European Conference on Information Systems (ECIS), Tel Aviv, Israel. Retrieved February 20, 2017, from http://aisel.aisnet.org/ecis2014/proceedings/track02/5

Auditing complex estimates: Understanding the process used and problems encountered. (2013). SSRN Electronic Journal. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=1857175

Kayhan, V. (2015, December 13-16). Confirmation bias: Roles of search engines and search contexts. Paper presented at the Thirty Sixth International Conference on Information Systems (ICIS), Fort Worth, TX. Retrieved August 25, 2017, from https://pdfs.semanticscholar.org/2b04/1eb44fd8031596ed8e73124801c5bcf550b1.pdf

Lowe, C. A., & Eisenberg, M. B. (2005). Big6 skills for information literacy. In K. E. Fisher, S. Erdelez, & L. McKechnie (Eds.), Theories of information behavior (pp. 63-69). Medford: Information Today.

VandenBos, G. R. (2007). APA dictionary of psychology. American Psychological Association. Retrieved December 11, 2017, from https://dictionary.apa.org/anchoring-bias

White, R. (2013, July 28-August 1). Beliefs and biases in web search. In G. J. F. Jones & P. Sheridan (Eds.), Proceedings of the 36th International ACM SIGIR Conference on Research and Development in Information Retrieval (pp. 3-12). ACM.

- Submission Date: 2019-10-30

- Accepted Date: 2020-05-10
